Comprehensive Confidence Intervals for Python Developers

Category > Statistics

Sep 08, 2019Confidence interval is uncertainty in summary statistic represented as a range. In the other words, it is a range of values we are fairly sure our true value lies in. For example: I am 95% confident that the population mean falls between 8.76 and 15.88 $\rightarrow$ (12.32 $\pm$ 3.56)

Confidence interval tells you how confident you can be that the results from a poll or survey reflect what you would expect to find if it were possible to survey the entire population. It is difficult to obtain measurement data of an entire data set (population) due to limited resource & time. Your best shot is to survey a small fraction (samples) of the entire data set, and pray that your sample data represents the population reasonably well.

Sample data may not be a good representation of a population by numerous factors (Ex: bias), and as a result, uncertainty is always introduced in any estimations derived from sample data. Due to the uncertainty involved with sample data, any statistical estimation needs to be delivered in a range, not in a point estimate.

How well a sample statistic estimates an underlying population parameter is always an issue (Population vs. Samples). A confidence interval addresses this issue by providing a range of values, which is likely to contain the population parameter of interest within the range of uncertainty.

Contents

- 1.Understanding confidence interval with analogy

- 2.Key takeaways

- 3.Population vs Samples

- Notes:Population variance $\sigma^2$ vs. Sample variance $s^2$

- Pythonic Tip:Difference between Numpy variance and Pandas variance

- 4.Confidence interval of normal distribution

- 4.1.Confidence interval of mean

- Notes:Distribution of various statistics

- Notes:z-score vs t-score

- Pythonic Tip:Computing confidence interval of mean with SciPy

- 4.2.Confidence interval of difference in mean

- Notes:Comparing means of more than two samples with ANOVA

- 4.2.1.Independent (unpaired) samples, equal variance - Student's t-interval

- 4.2.2.Independent (unpaired) samples, unequal variance - Welch's t-interval

- 4.2.3.Dependent (paired) samples - Paired t-interval

- Notes:Deciding which t-test to use

- 4.3.Confidence interval of variance

- Notes:Chi-square $\chi^2$ distribution

- Notes:One-tail vs two-tail

- Pythonic Tip:Computing confidence interval of variance with SciPy

- 4.4.Confidence interval of other statistics: Bootstrap

- 5.Confidence interval of non-normal distribution

1. Understanding confidence interval with analogy¶

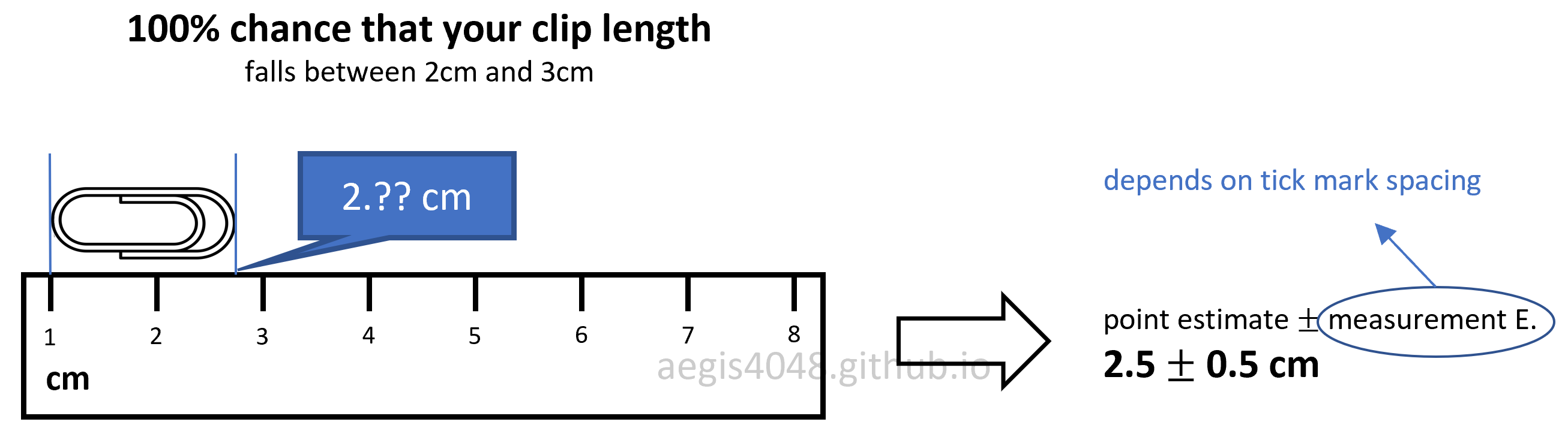

If you've taken a science class with lab reports in your highschool or college, you probably had to include measurement error in your lab reports. For example, if you were asked to measure the length of a paper clip with a ruler, you have to include $\pm0.5 \,\text{cm}$ or $\pm0.05\,\text{cm}$ (depending on the spacing of tick marks) to account for the measurement error that shows the precision of your measuring tool.

Based on figure (1), the paper clip seems to be about 2.7 cm long, but we don't know for sure because the tickmarks in the ruler is not precise enough to measure decimal length. However, I can tell with 100% confidence that the paper clip has a length between 2 ~ 3 cm, because the clip is between the 2 cm and 3 cm tickmarks. You record the length of the paper clip in a range, instead of a point estimate, to account for the uncertainty introduced by the limitation of the measuring tool.

Figure 1: Measurement error in ruler

Similar idea can be applied to a confidence interval of mean. You want to obtain a mean of a whole data set (population), but you can measure values of only a small fraction (samples) of the whole data set. This boils down to the traditional issue of Population vs Samples, due to the cost of obtaining measurement data of a large data set. Uncertainty is introduced in your samples, because you don't know if your samples are 100% representative of the population, free of bias. Therefore, you deliver your conclusion in a range, not in a point estimate, to account for the uncertainty.

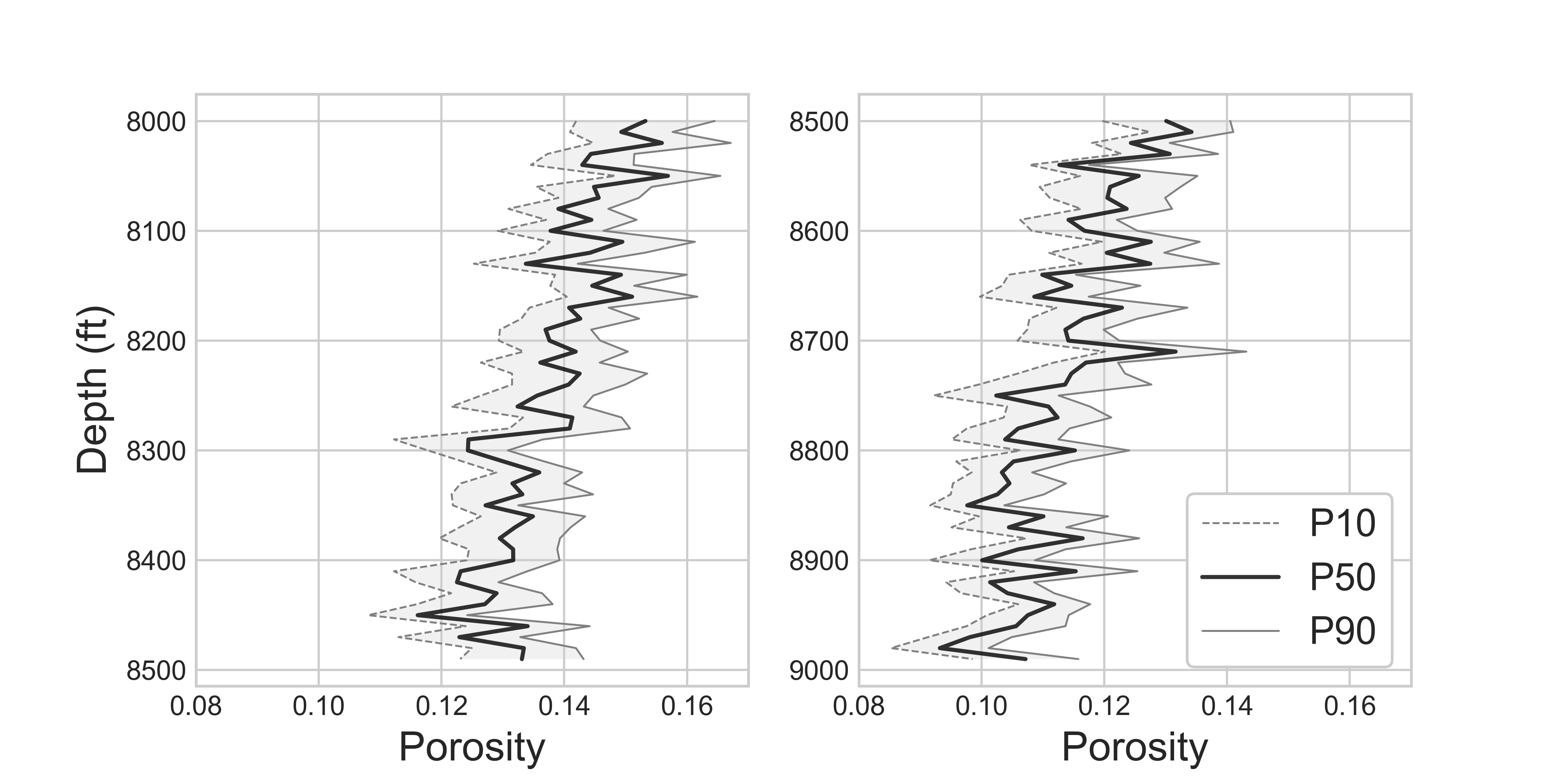

Example 1: Uncertainty in rock porosity

(Borrowed from Dr. Michael Pyrcz's Geostatistics class)

A reservoir engineer in the oil & gas industry wants to know the rock porosity of a formation to estimate the total oil reserve 9,500 ft underground. Due to the high cost of obtaining rock core samples from the deep formations, he could acquire only 12 rock core samples. Since the uncertainty of a point estimation scales inversely with a sample size, his estimation is subject to non-negligible uncertainty. He obtains 14.5% average rock porosity with 4.3% standard deviation. Executives in the company wants to know the worst-case scenario (P10) and the best-case scenario (P90) to make business decisions. You can convey your estimation of average porosity with uncertainty by constructing the confidence interval of mean.

Assuming that you have a reason to believe that the rock porosity follows normal distribution, you can construct its 80% confidence interval, with the procedure described below:

stats.t.interval(1 - 0.2, 12 - 1, loc=14.5, scale= 4.3 / np.sqrt(12))

The above range of uncertainty was acquired from the 12 rock core samples. In the worst-case scenario, the rock formation at 9,500 ft underground has 12.8% porosity. In the best-case scenario, the oil reservoir has 16.2% porosity. The same procedures can be applied for the core samples collected at different depths, which give us the confidence interval plot of rock porosities shown in figure (2).

Figure 2: Confidence interval of core samples porosities along depths

Source Code For Figure (2)

import numpy as np

from scipy import stats

import matplotlib.pyplot as plt

np.random.seed(39)

depth = [i * 10 + 8000 for i in range(100)]

l = len(depth)

avg_por = []

p10_por = []

p90_por = []

for i, item in enumerate(depth):

# You collect 12 rock core samples for each depth

# Assume that sample porosity follows a normal distribution

sample_size = 12

por_samples = np.random.normal(loc=0.15 - i/2000, scale=0.022, size=sample_size)

avg_por.append(np.mean(por_samples))

# 80% confidence interval of mean

p10, p90 = stats.t.interval(1 - 0.2, sample_size - 1, loc=np.mean(por_samples), scale=stats.sem(por_samples))

p10_por.append(p10)

p90_por.append(p90)

# plotting

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(1, 2, figsize=(8, 4))

ax[0].plot(avg_por[:l//2], depth[:l//2], 'k', label='P50', alpha=0.8)

ax[0].plot(p10_por[:l//2], depth[:l//2], 'grey', linewidth=0.7, label='P10', linestyle='--')

ax[0].plot(p90_por[:l//2], depth[:l//2], 'grey', linewidth=0.7, label='P90')

ax[0].set_xlim(0.08, 0.17)

ax[0].set_ylabel('Depth (ft)', fontsize=15)

ax[0].set_xlabel('Porosity', fontsize=15)

ax[0].fill_betweenx(depth[:l//2], p10_por[:l//2], p90_por[:l//2], facecolor='lightgrey', alpha=0.3)

ax[0].invert_yaxis()

ax[1].plot(avg_por[l//2:], depth[l//2:], 'k', label='P50', alpha=0.8)

ax[1].plot(p10_por[l//2:], depth[l//2:], 'grey', linewidth=0.7, label='P10', linestyle='--')

ax[1].plot(p90_por[l//2:], depth[l//2:], 'grey', linewidth=0.7, label='P90')

ax[1].set_xlim(0.08, 0.17)

ax[1].set_xlabel('Porosity', fontsize=15)

ax[1].legend(loc='best', fontsize=14, framealpha=1, frameon=True)

ax[1].fill_betweenx(depth[l//2:], p10_por[l//2:], p90_por[l//2:], facecolor='lightgrey', alpha=0.3)

ax[1].invert_yaxis()

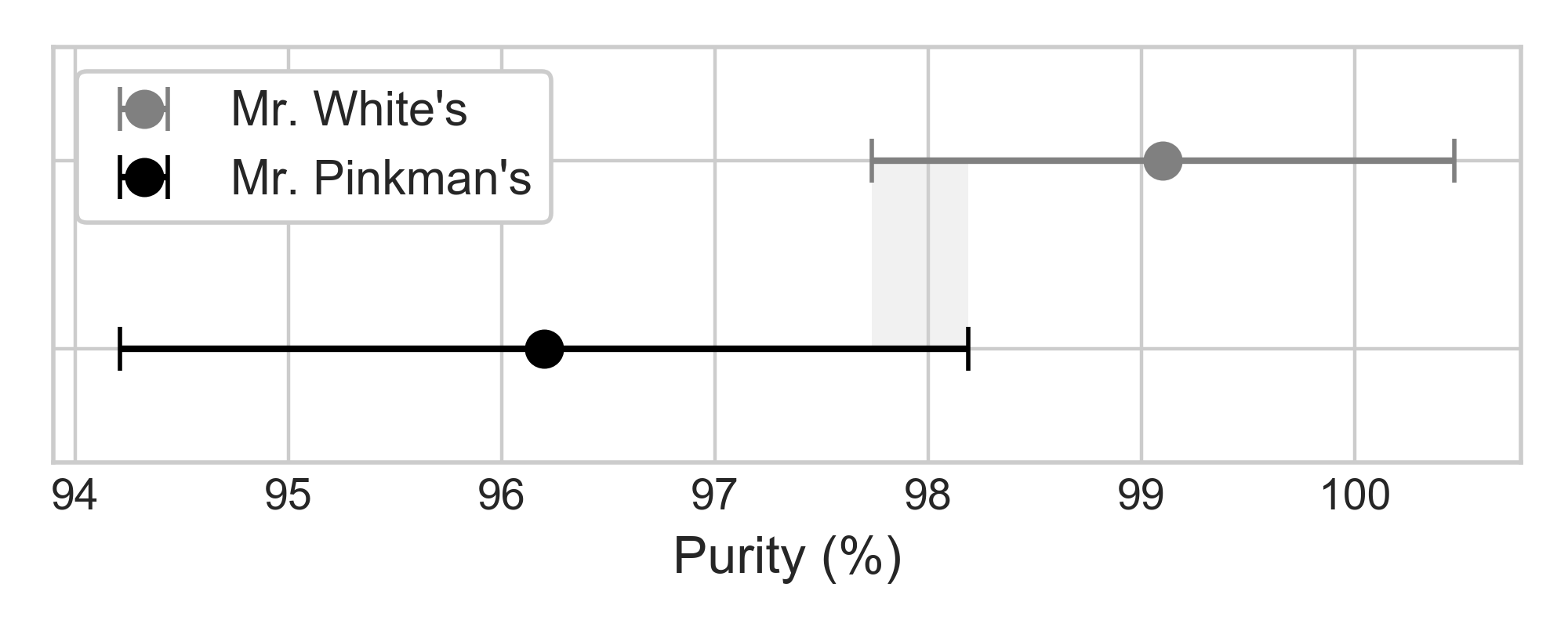

Example 2: Purity of methamphetamine (crystal) in Breaking Bad

21 batches of crystal cooked by Mr. White shows 99.1% average purity with 3% standard deviation. 18 batches of crystal cooked by Mr. Pinkman shows 96.2% average purity with 4% standard deviation. Does Mr. White always cook better crystal than Mr. Pinkman, or is it possible for Mr. Pinkman to beat Mr. White in purity of cooked crystals, by luck? We can construct 95% confidence interval assuming normal distribution, with the procedure described below:

# Mr. White's

stats.t.interval(1 - 0.05, 21 - 1, loc=99.1, scale= 3 / np.sqrt(21))

# Mr. Pinkman's

stats.t.interval(1 - 0.05, 18 - 1, loc=96.2, scale= 4 / np.sqrt(18))

There's a small overlap between the confidence intervals of Mr. White's and Mr. Pinkman's. Although it is true that Mr. White is a better cooker, Mr. Pinkman can cook a purer batch of crystals by a small chance, if he has the luck. Comparing the means of two sample data sets is closely related to constructing confidence interval of difference in mean.

Figure 3: Overlap in the 95% confidence interval of two samples

Source Code For Figure (3)

import matplotlib.pyplot as plt

from scipy import stats

import numpy as np

conf_pinkman = stats.t.interval(1 - 0.05, 18 - 1, loc=96.2, scale= 4 / np.sqrt(18))

conf_white = stats.t.interval(1 - 0.05, 21 - 1, loc=99.1, scale= 3 / np.sqrt(21))

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(figsize=(5, 2))

ax.errorbar(99.1, 1, xerr=(conf_white[1] - conf_white[0]) / 2,

fmt='o', markersize=8, capsize=5, label='Mr. White\'s', color='grey')

ax.errorbar(96.2, 0, xerr=(conf_pinkman[1] - conf_pinkman[0]) / 2,

fmt='o', markersize=8, capsize=5, label='Mr. Pinkman\'s', color='k')

ax.set_ylim(-0.6, 1.6)

ax.fill_betweenx([1, 0], conf_white[0], conf_pinkman[1], facecolor='lightgrey', alpha=0.3)

ax.legend(loc='best', fontsize=11, framealpha=1, frameon=True)

ax.set_xlabel('Purity (%)', fontsize=12)

ax.yaxis.set_major_formatter(plt.NullFormatter())

fig.tight_layout();

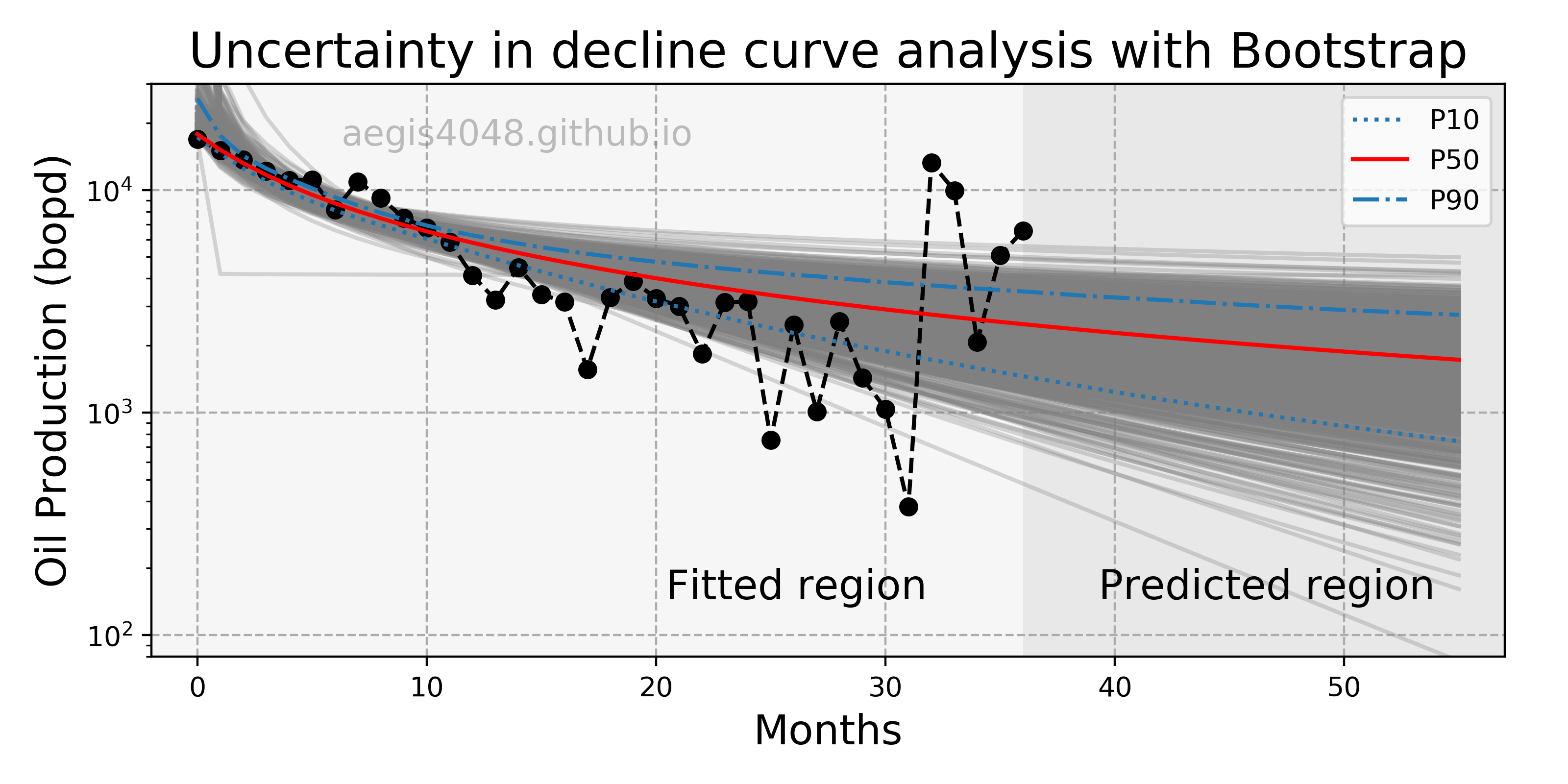

Example 3: Uncertainty in oil production forecast

A production engineer in the oil & gas industry wants to know the worst-case scenario (P10) and the best-case scenario (P90) of hydrocarbon production forecast. In petroleum engineering, we use a technique called Decline Curve Analysis (DCA) to project future hydrocarbon production. It is important to quantify the uncertanties of your DCA model, as the uncertainty in oil production can be as large as millions of dollars worth; executives in a company make business decisions based on P10 and P90 values. There are mainly three models for DCA: exponential, hyperbolic, and harmonic. For demonstration purpose, hyperbolic model will be used here. Hyperbolic decline curve can be defined as:

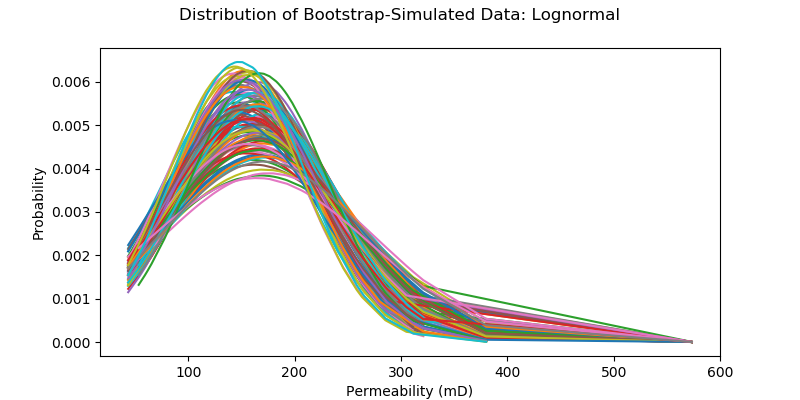

It is a non-linear regression problem with three parameters to optimize: $Di$, $q_i$, and $b$. The independent variable is time $t$. We can construct the confidence interval of regression model to get P10 and P90 values. Since the analytical solution for the confidence interval of non-linear model is complicated, and the data is not normally distributed, we use non-parametric numerical alternative, bootstrap, to obtain the following uncertainty forecast model:

Figure 4: Uncertainty in decline curve analysis forecast

Source Code For Figure (4)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from scipy.optimize import curve_fit

##################################### Prepare Data #########################################

file = 'https://aegis4048.github.io/downloads/notebooks/sample_data/decline_curve.xlsx'

df = pd.read_excel(file, sheet_name='sheet_1')

df = df[df['Oil Prod. (bopd)'] > 200] # remove bad data points

t = df['Time'][1:].values[: -40]

y = df['Oil Prod. (bopd)'][1:].values[:-40]

x = np.array([i for i in range(len(t))])

#################################### Define function #######################################

def hyperbolic(x, qi, b, Di):

return qi / (1 + b * Di * x) ** (1 / b)

################################# Bootstrap regression #####################################

np.random.seed(42)

y_boot_reg = []

for i in range(1000):

# Bootstrapping

boot_index = np.random.choice(range(0, len(y)), len(y))

x_boot = x[boot_index]

y_boot = y[boot_index]

# Curve fit data

popt, pcov = curve_fit(hyperbolic, x_boot, y_boot, maxfev=100000, p0=[max(y), 0.1, 0.1])

# Define predicted region

pred_x = [i for i in range(x[-1], x[-1] + 20)][1:]

x_new = np.append(x, np.array([pred_x]))

# Predict

y_boot_reg = y_boot_reg + [hyperbolic(x_new, *popt)]

y_boot_reg = np.array(y_boot_reg)

p10 = np.percentile(y_boot_reg, 10, axis=0)

p50 = np.percentile(y_boot_reg, 50, axis=0)

p90 = np.percentile(y_boot_reg, 90, axis=0)

# Basic curve fit

popt, pcov = curve_fit(hyperbolic, x, y, maxfev=100000, p0=[max(y), 0.1, 0.1])

###################################### Plotting ##########################################

fig, ax = plt.subplots(figsize=(8, 4))

for reg_sample in y_boot_reg:

ax.plot(x_new, reg_sample, color='grey', alpha=0.3)

ax.plot(x, y, '--o', color='k', alpha=1)

ax.plot(x_new, p10, ':',color='#1f77b4', alpha=1, label='P10')

ax.plot(x_new, hyperbolic(x_new, *popt), color='r', label='P50')

ax.plot(x_new, p90, '-.',color='#1f77b4', alpha=1, label='P90')

ax.set_yscale('log')

ax.set_ylim(80, 30000)

ax.set_xlim(-2, 57)

ax.set_xlabel('Months', fontsize=15)

ax.set_ylabel('Oil Production (bopd)', fontsize=15)

ax.set_title('Uncertainty in decline curve analysis with Bootstrap', fontsize=18)

ax.grid(True, linestyle='--', color='#acacac')

ax.axvspan(-2, x[-1], facecolor='#efefef', alpha=0.5)

ax.axvspan(x[-1], 57, facecolor='lightgrey', alpha=0.5)

ax.text(0.38, 0.1, 'Fitted region', fontsize=15,

transform=ax.transAxes, color='k')

ax.text(0.7, 0.1, 'Predicted region', fontsize=15,

transform=ax.transAxes, color='k')

ax.text(0.27, 0.91, 'aegis4048.github.io', fontsize=13, ha='center', va='center',

transform=ax.transAxes, color='grey', alpha=0.5)

ax.legend()

fig.tight_layout()

2. Key takeaways¶

For confidence interval of mean

For confidence interval of difference in mean

For confidence interval of proportion

For confidence interval of variance

For confidence interval of standard deviation

Different analytical solutions exist for different statistics. However, confidence interval for many other statistics cannot be analytically solved, simply because there are no formulas for them. If the statistic of your interest does not have an analytical solution for its confidence interval, or you simply don't know it, numerical methods like boostrapping can be a good alternative (and its powerful).

3. Population vs. samples¶

Confidence interval describes the amount of uncertainty associated with a sample estimate of a population parameter. One needs to have a good understanding of the difference between samples and population to understand the necessity of delivering statistical estimations in a range, a.k.a. confidence interval.

Figure 5: Population vs samples

Population: data set that contains all members of a specified group. Ex: ALL people living in the US.

Samples: data set that contains a part, or a subset, of a population Ex: SOME people living in the US.

Let's say that you are conducting a phone-call survey to investigate the society's perception of The Affordable Care Act (“Obamacare”). Since you can't call all 327.2 million people (population) in the US, you call about 1,000 people (samples). Your poll showed that 59% of the registered voters support Obamacare. This does not agree with the actual survey conducted in 2018; 53% favorable, 42% unfavorable (source). What could be the source of error?

Since (formal) president Obama is a member of the Democratic Party, the voters' response can be affected by their political preference. How could you tell that the 1,000 people you called happened to be mostly Democrats, who's more likely to support Obama's policy, because they share similar political view? The samples you collected could have been biased, but you don't that know for sure. Of course, the voters' response could be affected by many other factors like race, age, place of residence, or financial status. The idea is that, there will always be uncertainty involved with your estimation, because you don't have an access to the entire population.

Confidence interval is a technique that quantifies the uncertainty when estimating a population parameter from samples.

Notes: Population variance $\sigma^2$ vs. Sample variance $s^2$

Distinction between population parameter and sample parameter is important. In statistics, it is a common practice to denote population variance as $\sigma^2$, and sample variance as $s^2$. The distinction is important because different equations are used for each.

For population:

For samples:

The divisor $n-1$ is a correction factor for bias. Note that the correction has a larger proportional effect when $n$ is small than when $n$ is large, which is what we want because the more samples we have, the better the estimation. This idea is well explained on this StackExchange thread.

Pythonic Tip: Difference between Numpy variance and Pandas variance

Different libraries make different assumption about an input array. The default value of ddof is different for Pandas and Numpy, resulting in different variance. ddof represent degrees of freedom, and setting ddof=True or ddof=1 tells the variance function to calculate sample variance by accounting for the bias factor $n-1$ (recall that in Python, True==1.) Remember that there is a distinction between Population variance ($\sigma^2$) vs. Sample variance ($s^2$).

If you are confused which library is computing which variance (sample or population), just remember this: whatever library you are using, use ddof=True or ddof=1 to compute sample variance, and use ddof=False or ddof=0 to compute population variance.

import numpy as np

import pandas as pd

arr = pd.DataFrame([5,3,1,6])

# numpy, population

arr.values.var()

# numpy, sample

arr.values.var(ddof=1)

# pandas, population

arr.var(ddof=0)

# pandas, sample

arr.var()

4. Confidence interval of normal distribution¶

Computing confidence interval of a statistic depends on two factors: type of statistic, and type of sample distribution. As explained above, different formulas exist for different type of statistics (Ex: mean, std, variance), and different methods (Ex: boostrapping, credible interval, Box-Cox transformation) are used for non-normal data set.

We will cover confidence interval of mean, difference in mean and variance.

4.1. Confidence interval of mean¶

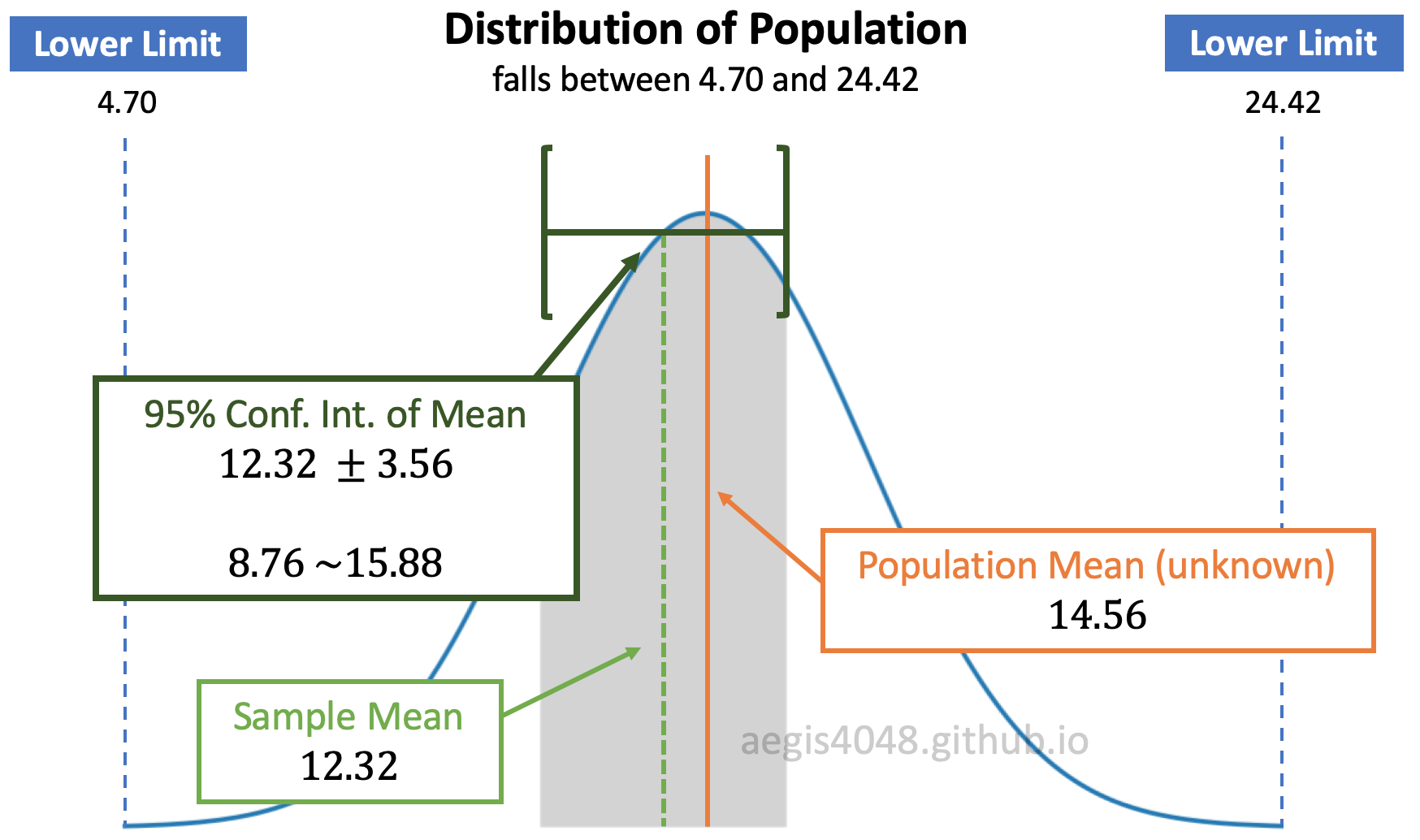

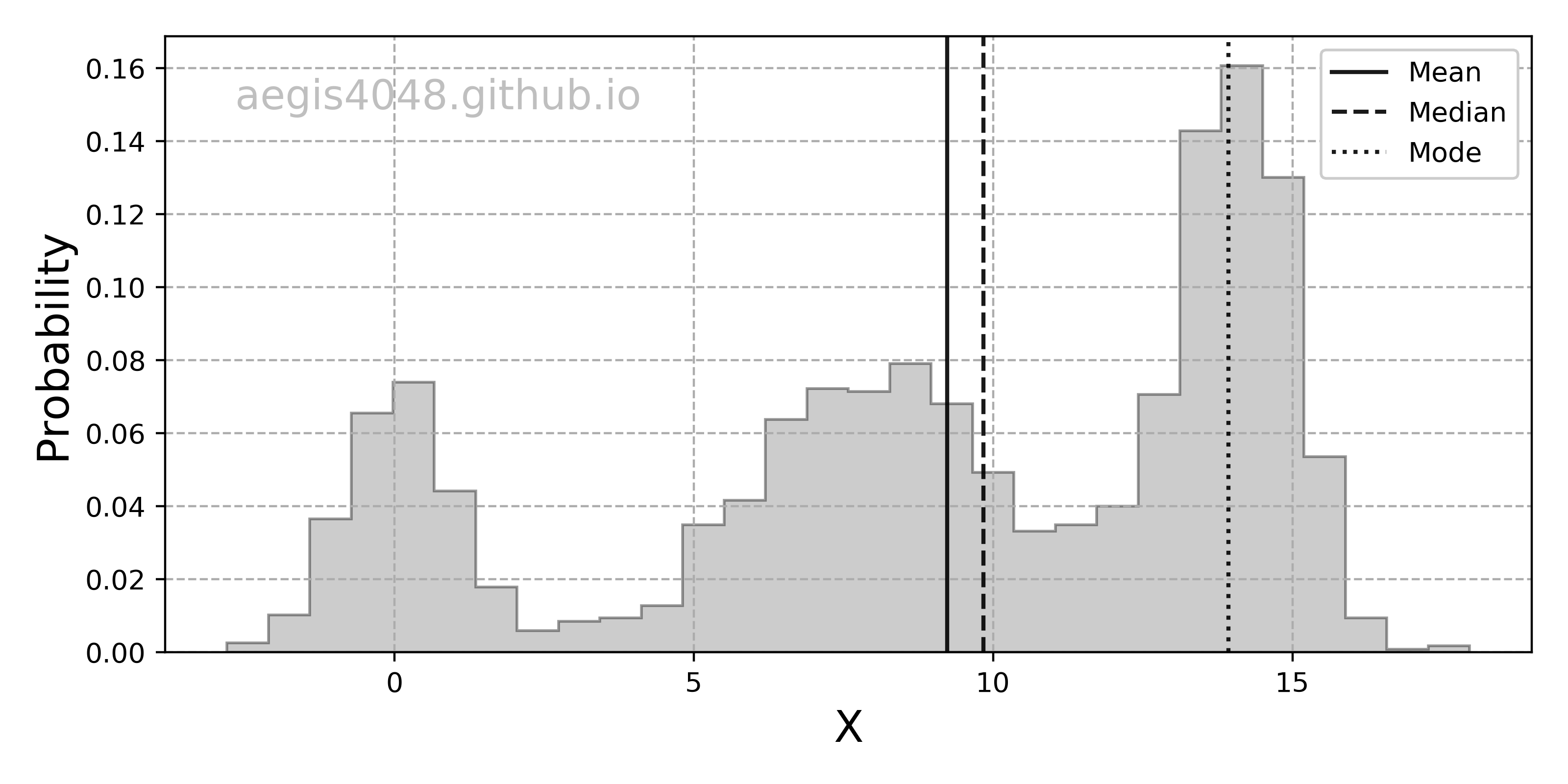

Confidence interval of mean is used to estimate the population mean from sample data and quantify the related uncertainty. Consider the following figure:

Figure 6: Distribution of population and C.I. of mean

In figure (6), assume that the population is normally distributed. Since we don't have an access to the entire population, we have to guess the population mean (unknown) to the best of our ability using sample data set. We do this by computing the sample mean and constructing its 95% confidence interval. Note that the popular choices of confidence level are: 90%, 95%, and 99%

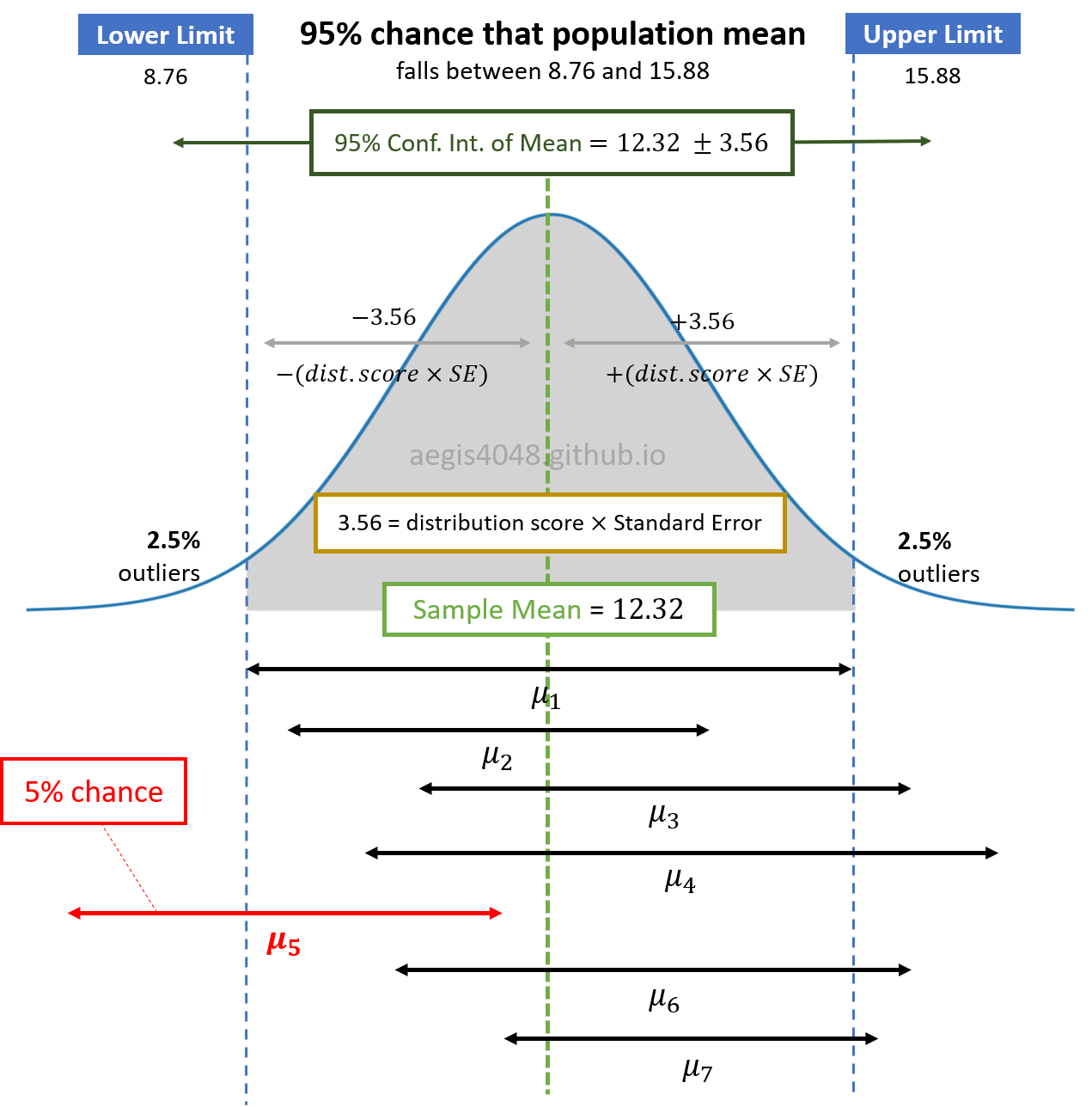

Assuming normality of population, its sample means are also normally distributed. Let's say that you have a population, and you draw small fractions of it $N$ times. Then, the computed means of $N$ sample sets $\boldsymbol{\mu}=(\mu_1, \mu_2,..., \mu_{N-1}, \mu_N)$ is normally distributed as shown in figure (7). Their confidence intervals are represented as the black horizontal arrows:.

Figure 7: Distribution of sample mean and its C.I.

You can see that the confidence interval of $\mu_5$ does NOT include the green vertical dashed line, 12.31. Let's assume that 12.31 is the true population mean (we never know if this is the actual population mean or not, but let's assume). If we get $\mu_5$ and its confidence interval as our estimation of the population mean, then our estimation is wrong. There is a 5% chance of this happening, because we set our confidence level as 95%. Note that the width of the confidence intervals (black horizontal arrows) depend on the sample size, as shown in eq (1)

The grey area of figure (6) is essentially equivalent to the grey area of figure (7). $\mu_1$ = 12.32 is the sample mean, and $\pm$ 3.56 is the uncertainty related to the sample mean with 95% confidence. The uncertainty is a product of distribution score and standard error of mean. Distribution score essentially tells how many standard error are the limits (8.76 and 15.88) away from the center (12.32). Choosing larger confidence level results in larger confidence interval. This increases the grey area in figure (6) and figure (7).

We convey 95% confidence interval of mean like this:

I am 95% confident that the population mean falls between 8.76 and 15.88. If I sample data 20 times, 19 times the sample mean will fall between 8.76 ~ 15.88, but expect that I will be wrong 1 time.

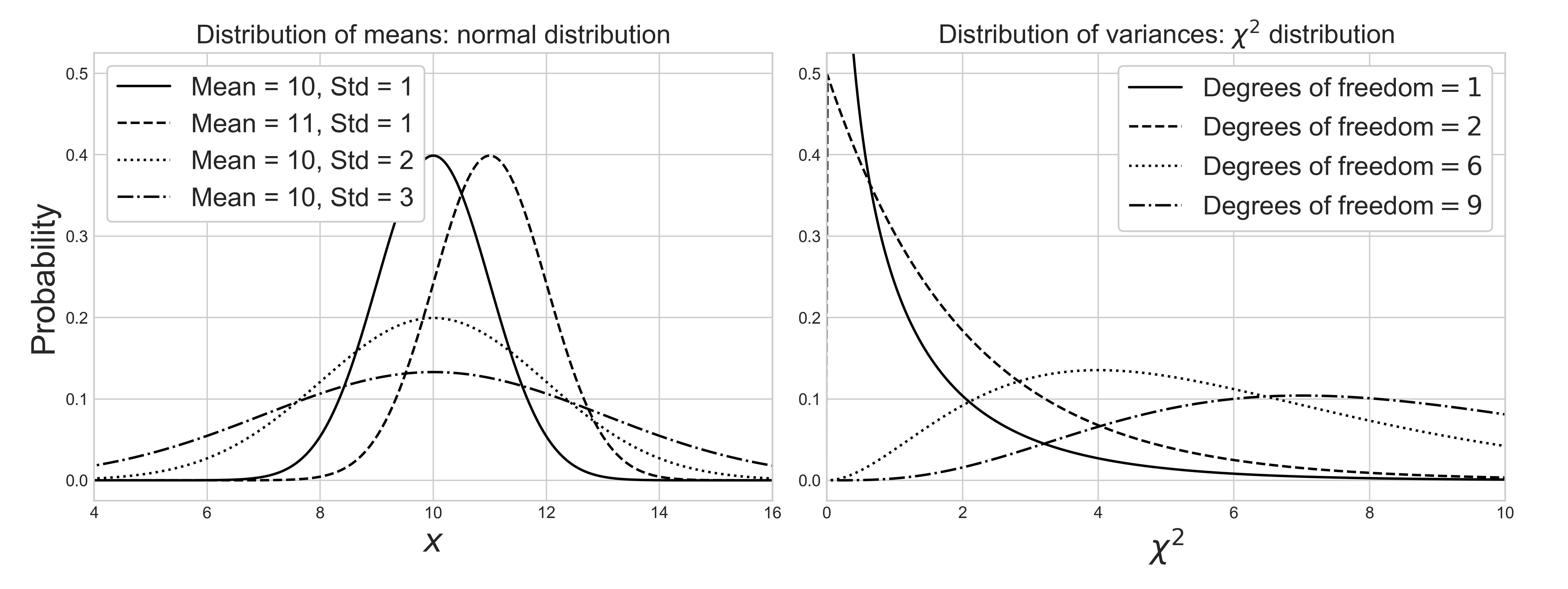

Notes: Distribution of various statistics

Different statistics exhibit different distributions. Normality of samples does not guarantee normality of its statistics. When the samples are normally distributed, their means are normally distributed, but their variances are chi-square $\chi^2$ distributed. More discussion about the distribution of variance and $\chi^2$ distribution is covered below. Note that these assumptions are invalid when samples are non-normal.

Source Code For The Figure

from scipy import stats

import matplotlib.pyplot as plt

import numpy as np

df_values = [1, 2, 6, 9]

linestyles = ['-', '--', ':', '-.']

normal_params = [(10, 1), (11, 1), (10, 2), (10, 3)]

x = np.linspace(-1, 20, 1000)

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(1, 2, figsize=(13.3, 5))

fig.tight_layout()

plt.subplots_adjust(left=0.09, right=0.96, bottom=0.12, top=0.93)

for df, norm_p, ls in zip(df_values, normal_params, linestyles):

ax[1].plot(x, stats.chi2.pdf(x, df, loc=0, scale=1),

ls=ls, c='black', label=r'Degrees of freedom$=%i$' % df)

ax[0].plot(x, stats.norm.pdf(x, loc=norm_p[0], scale=norm_p[1]),

ls=ls, c='black', label='Mean = %d, ' % norm_p[0] + 'Std = %s' % norm_p[1])

ax[0].set_xlim(4, 16)

ax[0].set_ylim(-0.025, 0.525)

ax[0].set_xlabel('$x$', fontsize=20)

ax[0].set_ylabel(r'Probability', fontsize=20)

ax[0].set_title(r'Distribution of means: normal distribution', fontsize=20)

ax[0].legend(loc='upper left', fontsize=16, framealpha=1, frameon=True)

ax[1].set_xlim(0, 10)

ax[1].set_ylim(-0.025, 0.525)

ax[1].set_xlabel('$\chi^2$', fontsize=20)

ax[1].set_title(r'Distribution of variances: $\chi^2$ distribution', fontsize=20)

ax[1].legend(loc='best', fontsize=16, framealpha=1, frameon=True)

If sample data is normal or normal-like distributed, we almost always assume t-distribution to compute confidence interval, as explained below. Then, the confidence interval of mean has the following analytical solution:

$\mu$

: sample mean

$n$

: number of samples

$df$

: degrees of freedom. In this example, df = $n$ - 1

Recall that when computing $s$, correction factor ($n-1$) is applied to account for sample bias, as explained above. Pay close attention to the standard error $\frac{s}{\sqrt{(n)}}$. As the sample size $n$ increases, the standard error decreases, reducing the range of confidence interval. This is intuitive in a sense that, the more samples we have, the less uncertainty we have with our statistical estimation. The length of the black horizontal arrows in figure (7) depends on the sample size. The larger the sample size, the narrower the width of arrows, and vice versa.

Notes: z-score vs t-score

You've probably seen mixed use of z-score and t-score for confidence interval during your studies. Long story short, it is safe and almost always better to use t-score than z-score.

Z-score ($z_{\frac{\alpha}{2}}$) is used for normal distribution, and t-score ($t_{\frac{\alpha}{2},df}$) is used for t-distribution. You use z-score if you know the population variance $\sigma^2$. If not, you use t-score. Since the population variance $\sigma^2$ is almost never known, you almost always use t-score for confidence interval. After all, the purpose of using confidence interval is to mitigate the issue of Population vs. Samples when estimating population parameter ($\sigma^2$) from samples. If you know the population parameters, you probably don't need confidence interval in the first place.

A natural question is, "how is it safe to use t-score instead of z-score? Shouldn't I be using z-score since I know that the population is normally distributed, from previous knowledge?" It is safe to do so because t-distribution converges to normal distribution according to the Centeral Limit Theorem. Recall that t-distribution behaves more and more like a normal distribution as the sample size increases.

Google "95% confidence z-score" and you will see $z$ = 1.96 at 95% confidence level. On the other hand, t-score approaches 1.96 as its degrees of freedom increases: $\lim_{df \to \infty}t$ = 1.96. For 95% confidence level, $t$ = 2.228 when $n$ - 1 = 10 and $t$ = 2.086 when $n$ - 1 = 20. This is why it is safe to always replace z-score with t-score when computing confidence interval.

Pythonic Tip: Computing confidence interval of mean with SciPy

We can compute confidence interval of mean directly from using eq (1). Recall to pass ddof=1 to make sure to compute sample standard deviation $s$, not population standard deviation $\sigma$, as explained above.

We will draw random samples from normal distribution using np.random.normal(). Note that loc is for population mean, and scale is for population standard deviation, and size is for number of samples to draw.

from scipy import stats

import numpy as np

np.random.seed(42)

arr = np.random.normal(loc=74, scale=4.3, size=20)

alpha = 0.05 # significance level = 5%

df = len(arr) - 1 # degress of freedom = 20

t = stats.t.ppf(1 - alpha/2, df) # t-critical value for 95% CI = 2.093

s = np.std(arr, ddof=1) # sample standard deviation = 2.502

n = len(arr)

lower = np.mean(arr) - (t * s / np.sqrt(n))

upper = np.mean(arr) + (t * s / np.sqrt(n))

(lower, upper)

Or we can compute with scipy.stats.t.interval(). Note that you don't divide alpha by 2, because the function does that for you. Also note that the standard error of mean $\frac{s}{\sqrt{n}}$ can be computed with scipy.stats.sem()

stats.t.interval(1 - alpha, len(arr) - 1, loc=np.mean(arr), scale=stats.sem(arr))

Note the default value of loc=0 and scale=1. This will assume sample mean $\mu$ to be 0, and standard error $\frac{s}{\sqrt{n}}$ to be 1, which assumes standard normal distribution of mean = 0 and standard deviation = 1. This is NOT what we want.

stats.t.interval(1 - alpha, len(arr) - 1)

4.2. Confidence interval of difference in mean¶

Confidence interval of difference in mean is not very useful by itself. But it is important to understand how it works, because it forms the basis of one of the most widely used hypothesis test: t-test.

Often we are interested in knowing if two distributions are significantly different. In the other words, we want to know if two sample data sets came from the same population by comparing central tendency of populations. A standard approach is to check if the sample means are different. However, this is a misleading approach in a sense that the means of samples are almost always different, even if the difference is microscopic. More useful would be to estimate the difference in a range to account for uncertainty, and compute probability that it is big enough to be of practical importance. T-test checks if the difference is "close enough" to zero by computing the confidence interval of difference in means.

T-test hypothesis

$\mu$

: sample mean

$H_0$

: null hypothesis — sample means are the same "enough"

: alternate hypothesis — sample means are "significantly" different

Note that the above hypothesis tests whether the mean of one group is significantly DIFFERENT from the mean of the other group; we are using two-tailed test. This does not check if the mean of one group is significantly GREATER than the mean of the other group, which uses one-tailed test.

Notes: Comparing means of more than two samples with ANOVA

Analysis of variance (ANOVA) checks if the means of two or more samples are significantly different from each other. Using t-test is not reliable in cases where there are more than 2 samples. If we conduct multiple t-tests for comparing more than two samples, it will have a compounded effect on the error rate of the result.

ANOVA has the following hypothesis:

where $L$ is the number of groups, and $\mu_a$ and $\mu_b$ belong to any two sample means of any groups. This article illustrates the concept of ANOVA very well.

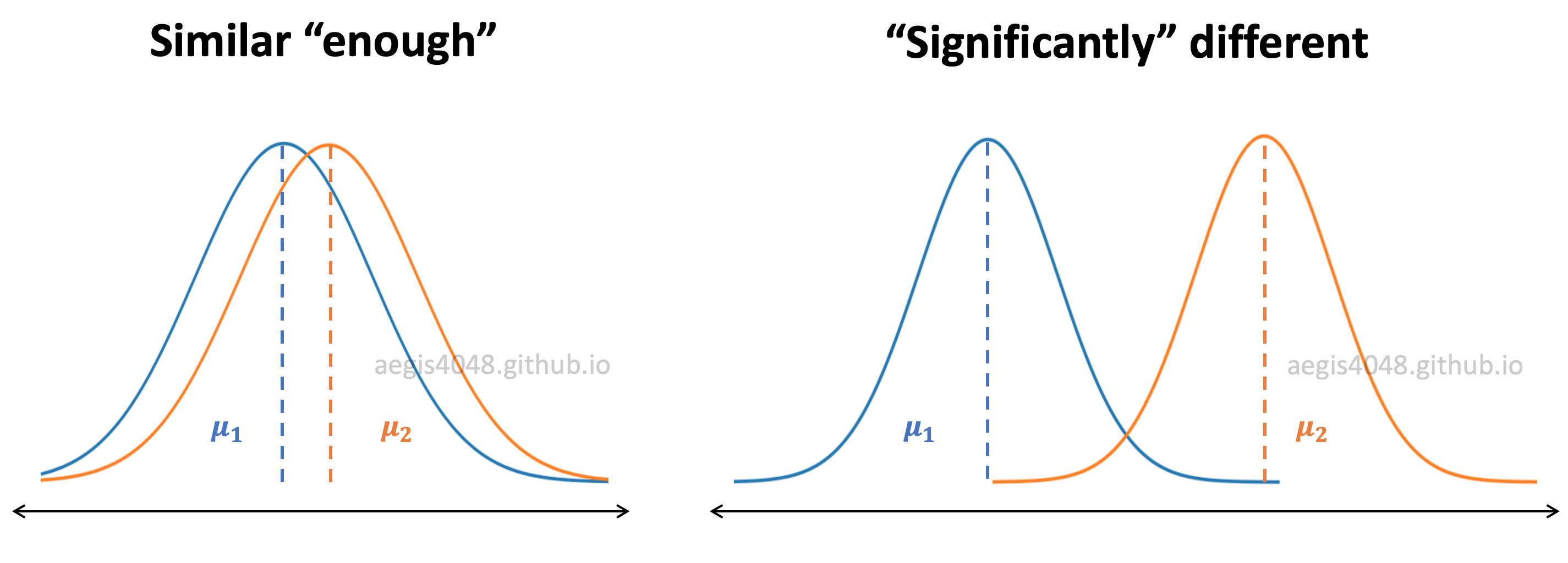

Figure 8: Distributions of samples

In figure (8), $\mu$ represents the sample mean. If two sample data sets are from the same population, the distribution of means will be similar "enough". If not, they will be "significantly" different. It can be visually inspected by the area of overlap. The larger the overlap, the bigger the chance of the two distributions originating from the same population.

The more robust way to compare sample means would be to construct the confidence interval of difference in means. If the two samples came from the same population, they should have the similar "enough" means. Their difference should be close to zero and satisfy (or fail to reject) the null hypothesis $H_0: \mu_1 - \mu_2 = 0$ within a range of uncertainty. Consider the following figure:

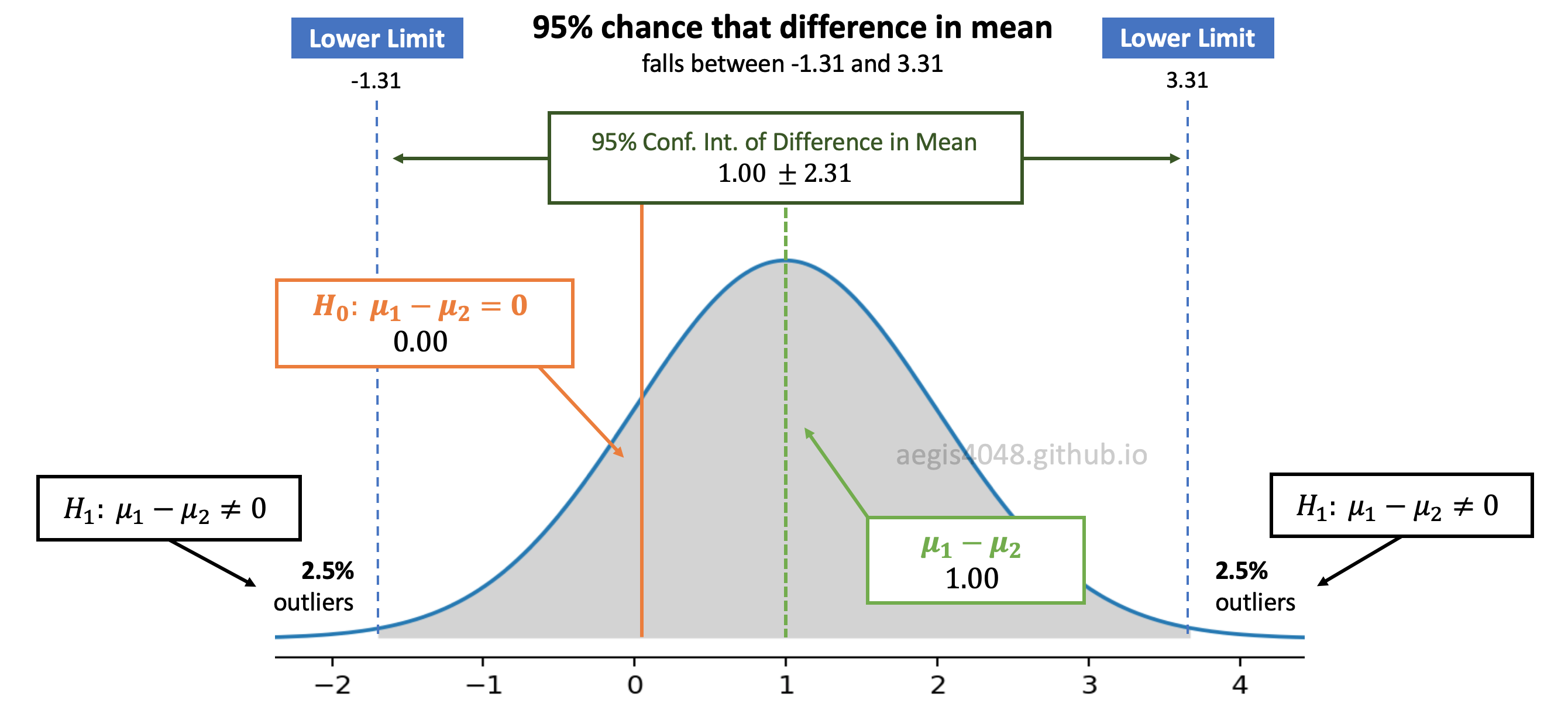

Figure 9: Distribution of difference in means

In figure (9), the calculated difference in sample means is $\mu_1 - \mu_2 = 1.00$. We deliver the uncertainty related to our estimation of difference in sample means by constructing its 95% confidence interval $[$-1.31 ~ 3.31$]$. Since the null hypothesis $H_0: \mu_1 - \mu_2 = 0$ is within the 95% confidence interval (grey shaded area), we accept the null hypothesis; we conclude that the samples have the same means within the uncertainty.

However, if the null hypothesis is not within the confidence interval and falls in the 2.5% outliers zone, we reject the null hypothesis and accept the alternate hypothesis $H_1: \mu_1 - \mu_2 \neq 0$. In the other words, we conclude that the sample means are significantly different.

Three variations of confidence interval of difference in means

There are three variations of t-test, and therefore there are three variations of confidence interval of difference in means. The difference & application of the three variations are really well-explained in Wikipedia (one of the few that are actually easy to understand, with minimum jargons.)

Independent (unpaired) samples, equal variance - Student's t-interval

Independent (unpaired) samples, unequal variance - Welch's t-interval

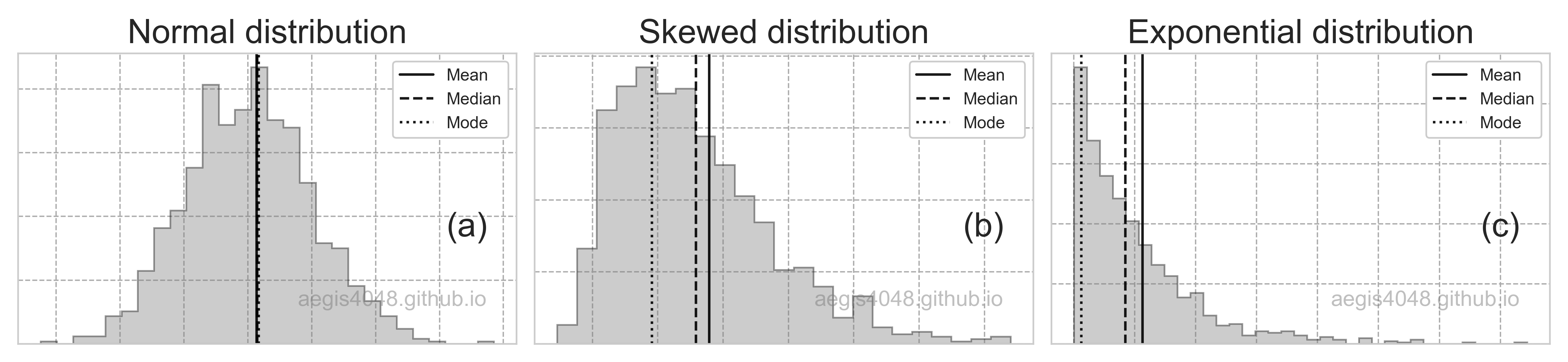

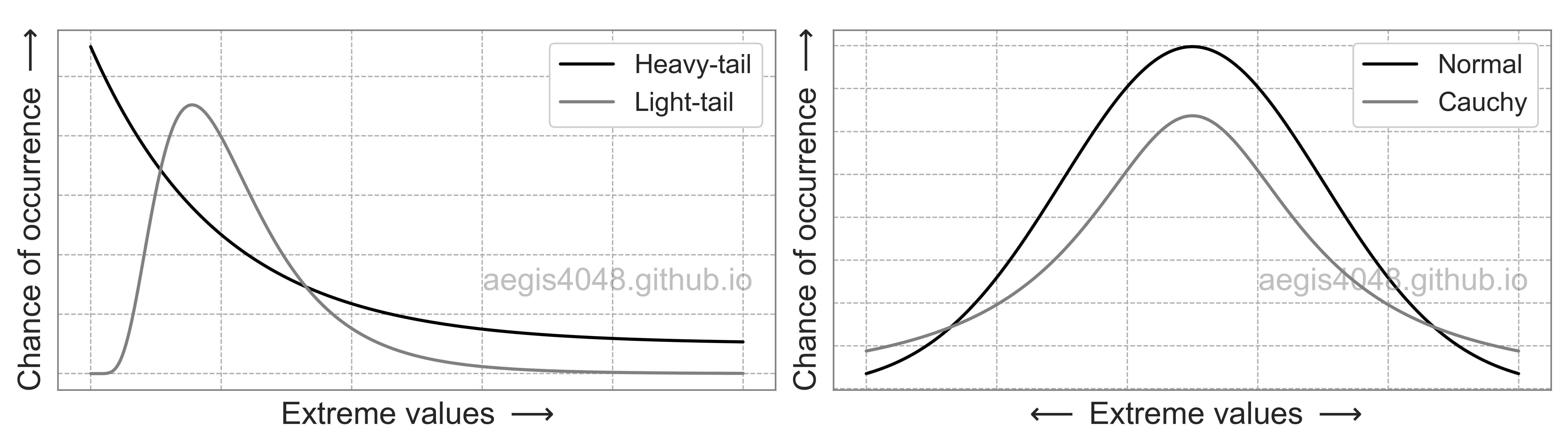

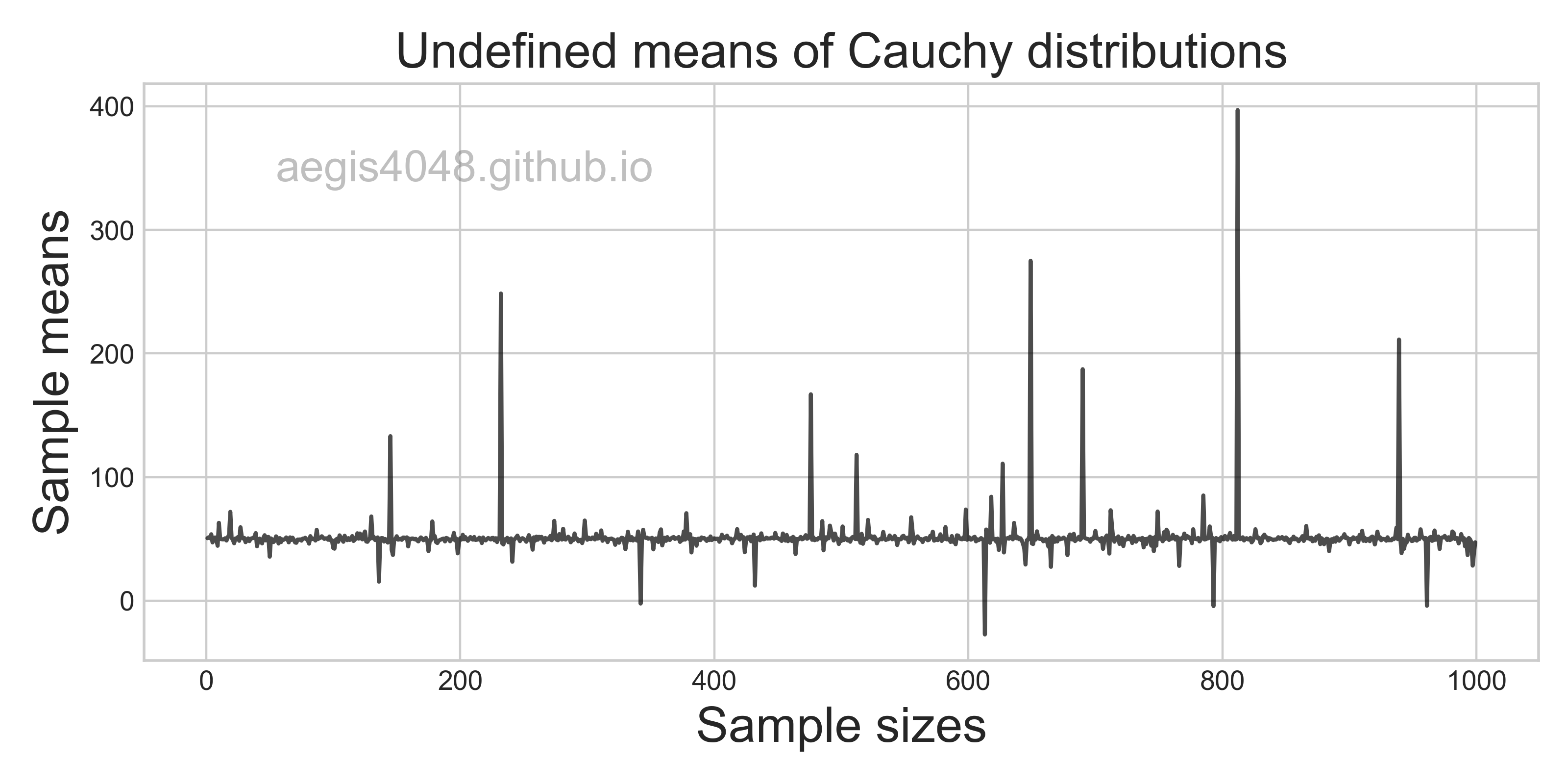

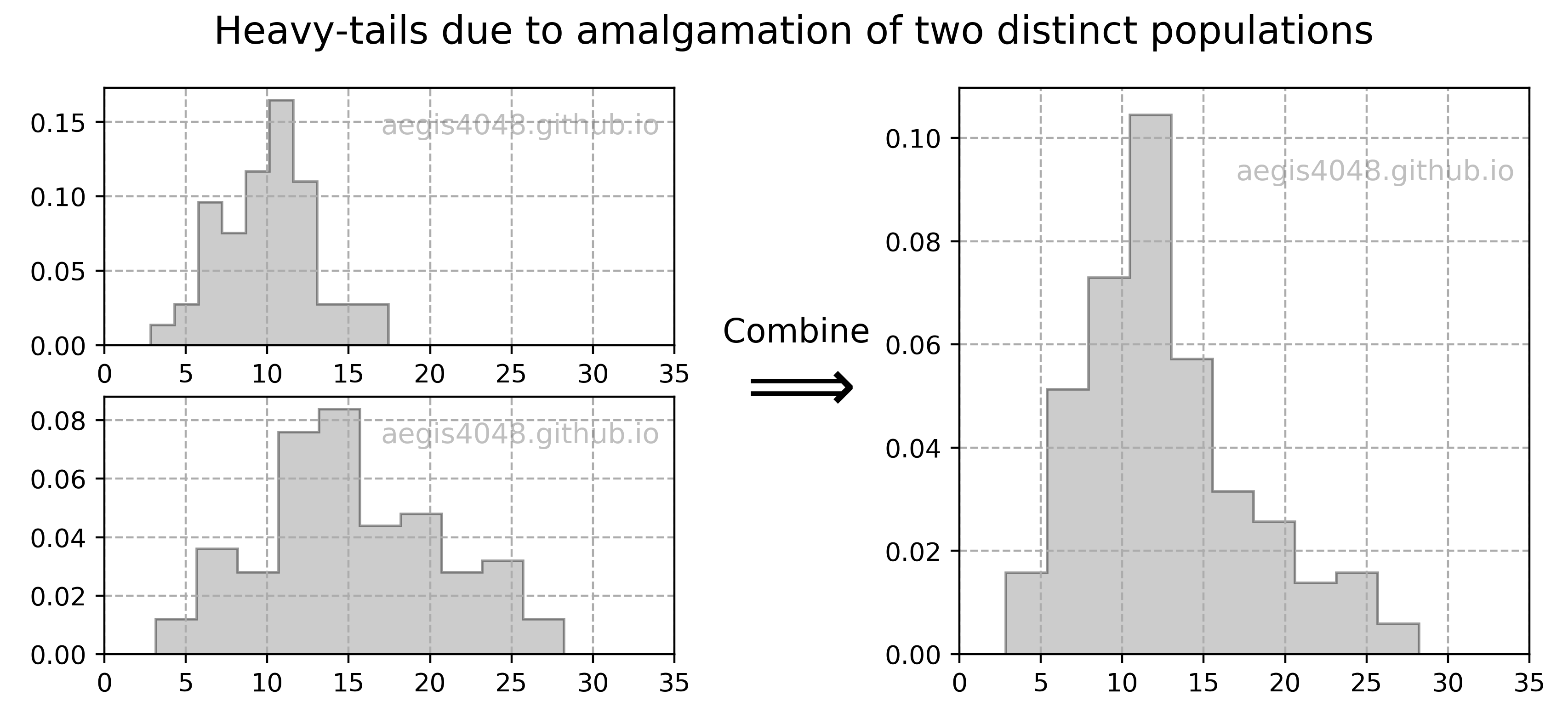

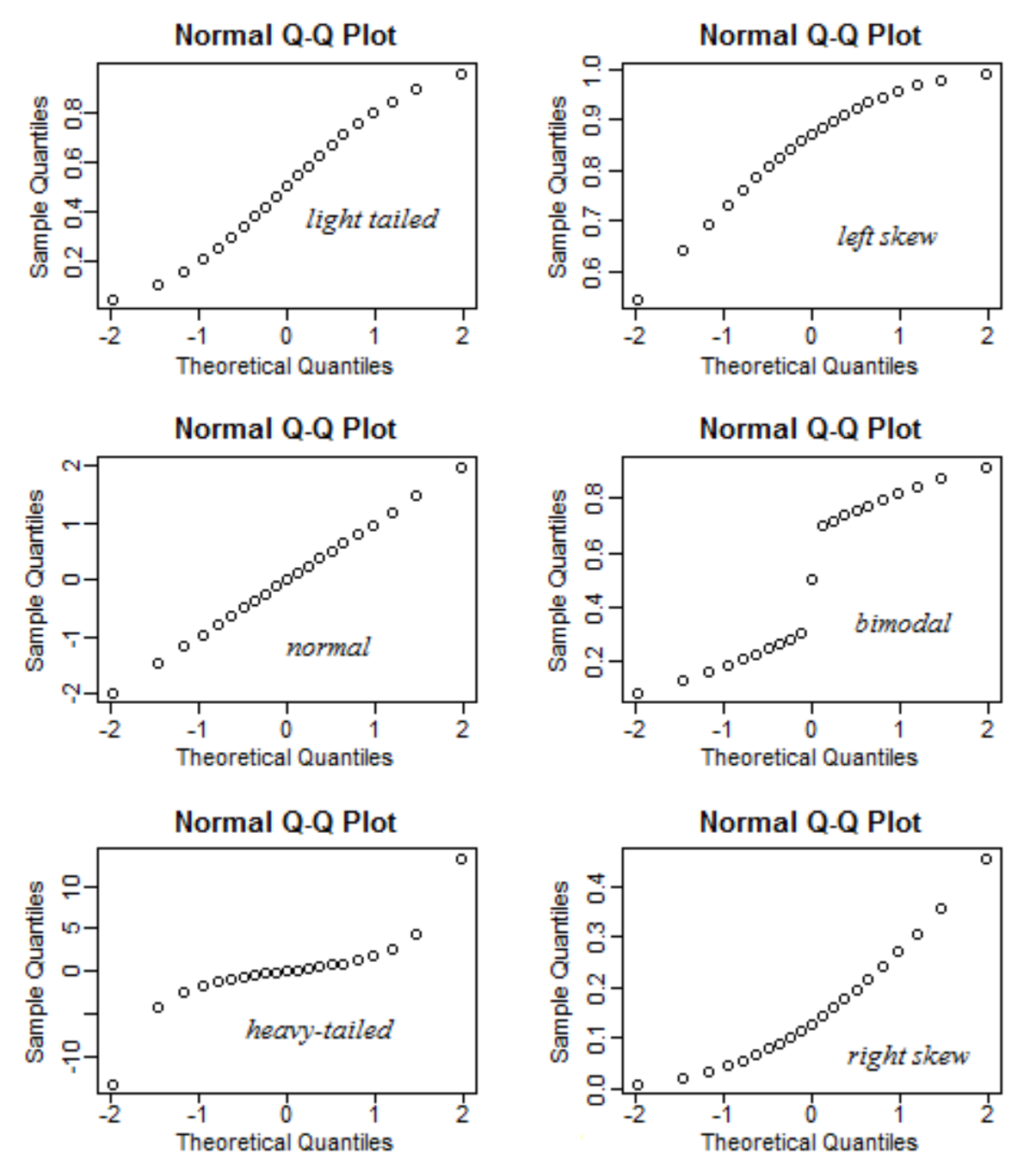

Recall that all t-tests assume normality of data. However, they are pretty robust to non-normality as long as the deviation from normality isn't large. Visualize your distributions to test this. Robustness of t-test to non-normality is discussed in detail below.

4.2.1. Independent (unpaired) samples, equal variance - student's t-interval¶

When you have a reason to believe that samples have nearly equal variances, you can use student's t-test to check if difference in means are significantly different. Note that student's t-test works pretty well even with unequal variances as long as sample sample sizes are equal or nearly equal, and sample sizes are not tiny.

However, it is recommended to always use Welch's t-test by assuming unequal variances, as explained below. Use student's t-test if you are ABSOLUTELY sure that the population variances are nearly equal.

Confidence interval of difference in mean assuming equal variance (student's t-interval) can be calculated as follows:

The formula for the pooled standard deviation $s_p$ looks a bit overwhelming, but its just an weighted average standard deviation of two samples, with bias correction factor $n_i-1$ for each sample. Recall that student's t-test assumes equal variances of two samples. You calculate what is assumed to be the common variance (=pooled variance, $s_p^2$) by computing the weighted average from each sample's variance.

In eq (4), $t$-score depends on significance level $\alpha$ and degrees of freedom $df$. In student's t-test, which assumes equal variance:

Pythonic Tip: Computing student's t-interval

Unfortunately, SciPy doesn't support computing confidence intereval of difference in mean separately. It is incorporated into computing t-statistic and p-value of t-test, but users can't access its underlying confidence interval. Note that in R, users have access to the CI of difference in means.

We can compute CI of difference in means assuming equal variance with eq (4). Don't forget to compute sample variance, instead of population variance by setting ddof=1 as explained above.

from scipy import stats

import numpy as np

x1 = [12.9, 10.2, 7.4, 7.0, 10.5, 11.9, 7.1, 9.9, 14.4, 11.3]

x2 = [10.2, 6.9, 10.9, 11.0, 10.1, 5.3, 7.5, 10.3, 9.2, 8.8]

alpha = 0.05 # significance level = 5%

n1, n2 = len(x1), len(x2) # sample sizes

s1, s2 = np.var(x1, ddof=1), np.var(x2, ddof=1) # sample variances

s = np.sqrt(((n1 - 1) * s1 + (n2 - 1) * s2) / (n1 + n2 - 2)) # pooled standard deviation

df = n1 + n2 - 2 # degrees of freedom

t = stats.t.ppf(1 - alpha/2, df) # t-critical value for 95% CI

lower = (np.mean(x1) - np.mean(x2)) - t * np.sqrt(1 / len(x1) + 1 / len(x2)) * s

upper = (np.mean(x1) - np.mean(x2)) + t * np.sqrt(1 / len(x1) + 1 / len(x2)) * s

(lower, upper)

The 95% confidence interval of difference in means has 0 within its interval. This means that the null hypothesis, $H_0: \mu_1 - \mu_2 = 0$ in figure (9), falls within the interval and we fail to reject the null hypothesis. We conclude that the sample means are not significantly different.

We can confirm this by running a formal hypothesis testing with scipy.stats.ttest_ind(), and setting equal_var=True. Note that this assumes independent t-test with pooled variance, which is equivalent to student's t-test.

stats.ttest_ind(x1, x2, equal_var=True)

The computed pvalue=0.229 is bigger than the significance level of alpha = 0.05, and therefore we fail to reject the null hypothesis, which is consistent with the conclusion drawn from the confidence interval of difference in mean.

Checking results with R:

a <- c(12.9, 10.2, 7.4, 7.0, 10.5, 11.9, 7.1, 9.9, 14.4, 11.3)

b <- c(10.2, 6.9, 10.9, 11.0, 10.1, 5.3, 7.5, 10.3, 9.2, 8.8)

t.test(a, b, var.equal = TRUE)

# Two Sample t-test

# data: a and b

# t = 1.2453, df = 18, p-value = 0.229

# 95 percent confidence interval:

# -0.8520327 3.3320327

# sample estimates:

# mean of x mean of y

# 10.26 9.02

4.2.2. Independent (unpaired) samples, unequal variance - Welch's t-interval¶

When comparing central tendency of normal distributions, it is safer, and therefore recommended to always use Welch's t-test, which assumes unequal variances of samples, as explained below. Equal variance t-test is not robust when population variances are different, but unequal variances are robust even when population variances are equal.

Confidence interval of difference in mean assuming unequal variance (Welch's t-interval) can be calculated as follows:

The formula is very similar to student's t-interval. There are two main differences:

1. We use each sample's own variance $s_1^2$ and $s_2^2$, instead of pooled (weighted average) variance $s_p^2$.

2. Degrees of freedom $df$ is computed with eq (7).

Pythonic Tip: Computing Welch's t-interval

The procedure is very similar to Computing student's t-interval. We will compute confidence interval of difference in mean assuming unequal variance, with eq (6). Although Scipy supports computing t-statistic for Welch's t-test, it doesn't support a function that allows us to compute Welch's t-interval. We will have to write our own codes to compute it.

Don't forget to compute sample variance, instead of population variance by setting ddof=1 as explained above.

from scipy import stats

import numpy as np

x1 = [12.9, 10.2, 7.4, 7.0, 10.5, 11.9, 7.1, 9.9, 14.4, 11.3]

x2 = [10.2, 6.9, 10.9, 11.0, 10.1, 5.3, 7.5, 10.3, 9.2, 8.8]

alpha = 0.05 # significance level = 5%

n1, n2 = len(x1), len(x2) # sample sizes

s1, s2 = np.var(x1, ddof=1), np.var(x2, ddof=1) # sample variances

df = (s1/n1 + s2/n2)**2 / ((s1/n1)**2/(n1-1) + (s2/n2)**2/(n2-1)) # degrees of freedom

t = stats.t.ppf(1 - alpha/2, df) # t-critical value for 95% CI

lower = (np.mean(x1) - np.mean(x2)) - t * np.sqrt(1 / len(x1) + 1 / len(x2)) * s

upper = (np.mean(x1) - np.mean(x2)) + t * np.sqrt(1 / len(x1) + 1 / len(x2)) * s

(lower, upper)

The 95% confidence interval of difference in means has 0 within its interval. This means that the null hypothesis, $H_0: \mu_1 - \mu_2 = 0$ in figure (9), falls within the interval and we fail to reject the null hypothesis. We conclude that the sample means are not significantly different.

We can confirm this by running a formal hypothesis testing with scipy.stats.ttest_ind(), and setting equal_var=False. Note that this assumes independent t-test with pooled variance, which is equivalent to student's t-test.

stats.ttest_ind(x1, x2, equal_var=False)

The computed pvalue=0.230 is bigger than the significance level of alpha = 0.05, and therefore we fail to reject the null hypothesis, which is consistent with the conclusion drawn from the confidence interval of difference in mean.

Checking results with R:

a <- c(12.9, 10.2, 7.4, 7.0, 10.5, 11.9, 7.1, 9.9, 14.4, 11.3)

b <- c(10.2, 6.9, 10.9, 11.0, 10.1, 5.3, 7.5, 10.3, 9.2, 8.8)

t.test(a, b, var.equal = FALSE)

# Welch Two Sample t-test

# data: a and b

# t = 1.2453, df = 16.74, p-value = 0.2302

# alternative hypothesis: true difference in means is not equal to 0

# 95 percent confidence interval:

# -0.8633815 3.3433815

# sample estimates:

# mean of x mean of y

# 10.26 9.02

4.2.3. Dependent (paired) samples - Paired t-interval¶

This test is used when the samples are dependent; that is, when there is only one sample that has been tested twice (repeated measures) or when there are two samples that have been matched or "paired" (paired or unpaired? read below.)

Confidence interval of difference in means assuming paired samples can be calculated as follows:

The equation is very similar to eq (1), except that we are computing mean and standard deviation of differences between before & after state of test subjects. Let's try to understand this with an example.

A school develops a tutoring program to improve the SAT scores of high school students. A school requires students to take tests before & after tutoring, and checks if the tutoring had a significant impact on the SAT scores of students. Because the test subjects are compared to themselves, not anyone elses, the measurements taken before & after the training are not independent.

To compute dependent t-interval, we compute differences of test scores before & after tutoring:

| Student # | $X_1$ | $X_2$ | $X_1$ - $X_2$ |

|---|---|---|---|

| 1 | 1480 | 1510 | -30 |

| 2 | 1280 | 1460 | -180 |

| 3 | 890 | 1320 | -430 |

| 4 | 340 | 700 | -360 |

| 5 | 1550 | 1550 | 0 |

| 6 | 1230 | 1420 | -190 |

| 7 | 1010 | 1340 | -330 |

| 8 | 1590 | 1570 | 20 |

| 9 | 1390 | 1500 | -110 |

| 10 | 980 | 1300 | -320 |

We find $\bar{d}$ = -193.0, and $s_d$ = 161.7. These values are plugged into eq (8). Degrees of freedom $df$ for dependent t-interval can be computed with:

Unlike independent t-test, in which two samples can have different sample sizes $n_1$ and $n_2$, depedent t-test has only one sample size, because the test subjects are compared to themselves.

Also note that dependent t-test assumes difference of test scores to be normally distributed, not test scores of students themselves. But as long as the test scores are normally distributed, the difference of test scores will also be normally distributed due to the property of normal distributions.

Pythonic Tip: Computing paired t-interval

Although Scipy supports computing t-statistic for dependent t-test, it doesn't support a function that allows us to compute dependent t-interval. We will have to write our own codes to compute it. You can compute it with eq (8)

Don't forget to compute sample standard devaition, instead of population standard deviation by setting ddof=1 as explained above.

from scipy import stats

import numpy as np

x1 = np.array([1480, 1280, 890, 340, 1550, 1230, 1010, 1590, 1390, 980])

x2 = np.array([1510, 1460, 1320, 700, 1550, 1420, 1340, 1570, 1500, 1300])

alpha = 0.05 # significance level = 5%

d_bar = np.mean(x1 - x2) # average of sample differences

s_d = np.std(x1 - x2, ddof=1) # sample standard deviation of sample differences

n = len(x1) # sample size

df = n - 1 # degrees of freedom

t = stats.t.ppf(1 - alpha/2, df) # t-critical value for 95% CI

lower = d_bar - t * s_d / np.sqrt(n)

upper = d_bar + t * s_d / np.sqrt(n)

(lower, upper)

The 95% confidence interval of difference in means for dependent samples does not have 0 within its interval. This means that the null hypothesis, $H_0: \mu_1 - \mu_2 = 0$ in figure (9), does not fall within the interval. Instead, our estimation falls within the 2.5% outlier zone on the left, $H_1: \mu_1 - \mu_2 \neq 0$. We reject the null hypothesis $H_0$, and accept the alternate hypothesis $H_1$. We conclude that the sample means are significantly different. In the other words, the tutoring program developed by the school had significant impact on the SAT score of its students.

We can confirm this by running a formal hypothesis testing with scipy.stats.ttest_rel(). Note that this assumes dependent t-test.

stats.ttest_rel(x1, x2)

The computed pvalue=0.004 is smaller than the significance level of alpha = 0.05, and therefore we reject the null hypothesis and accept the alternate hypothesis, which is consistent with the conclusion drawn from the confidence interval of difference in mean.

Notes: The above hypothesis testing answers the question of "Did this tutoring program had a significant impact on the SAT scores of students?". However, in cases like this, a more intuitive question is "Did this tutoring program significantly improve the SAT scores of students?" The former uses two-tailed test, and the latter uses one-tailed test, and the procedures for them are a little different.

Checking results with R:

x1 = c(1480, 1280, 890, 340, 1550, 1230, 1010, 1590, 1390, 980)

x2 = c(1510, 1460, 1320, 700, 1550, 1420, 1340, 1570, 1500, 1300)

t.test(x1, x2, paired=TRUE)

# Paired t-test

# data: x1 and x2

# t = -3.7753, df = 9, p-value = 0.004381

# alternative hypothesis: true difference in means is not equal to 0

# 95 percent confidence interval:

# -308.64568 -77.35432

# sample estimates:

# mean of the differences

# -193

Notes: Deciding which t-test to use

Equal or unequal variance?

Long story short, always assume unequal variance of samples when using t-test or constructing confidence interval of difference in means.

Student's t-test is used for samples of equal variance, and Welch's t-test is used for samples of unequal variance. A natural question is, how do you know which test to use? While there exist techniques to check homogeneity of variances (f-test, Barlett's test, Levene's test), it is dangerous to run hypothesis testing for equality of variances to decide which t-test to use (student's t-test or Welch's t-test), because it increases Type I error (asserting something that is absent, false positive). This is shown by Moser and Stevens (1992) and Hayes and Cai (2010).

Kubinger, Rasch and Moder (2009) argue that when the assumptions of normality and homogeneity of variances are met, Welch's t-test performs equally well, but outperforms when the assumptions are not met. Ruxton (2006) argues that the "unequal variance t-test should always be used in preference to the Student's t-test" (Note: what he means by "always" is assuming normality of distribution)

Also note that R uses Welch's t-test as the default for the t.test() function.

Independent (unpaired) or dependent (paired) samples?

Paired t-test compares the same subjects at 2 different times . Unpaired t-test compares two different subjects.

Samples are independent (unpaired) if one measurement is taken on different groups. For example in medical treament, group A is a control group, and is given a placebo with no medical effect. Group B is a test group, and receives a prescribed treatment with expected medical effect. Health check is applied on two groups, and the measurements are recorded. We say that the measurement from group A is independent from that of group B.

Samples are dependent (paired) when repeated measures are taken on the same or related subjects. For example, there may be instances of the same patients being tested repeatedly - before and after receiving a particular treatment. In such cases, each patient is being used as a control sample against themselves. This method also applies to cases where the samples are related in some manner or have matching characteristics, like a comparative analysis involving children, parents or siblings.

If you have a reason to believe that samples are correlated in any ways, it is recommended to use dependent test to reduce the effect of confounding factors.

4.3. Confidence interval of variance¶

Confidence interval of variance is used to estimate the population variance from sample data and quantify the related uncertainty. C.I. of variance is seldom used by itself, but rather used in conjunction with f-test, which tests equality of variances of different populations. Similar to how the confidence interval of difference in mean forms the foundation of t-test, C.I. of variance forms the foundation of f-test. In the field of statistics and machine learning, the equality of variance is an important assumption when choosing which technique to use. For example, when comparing the means of two samples, student's t-test should not be used when you have a reason to believe that the two samples have different variances. Personally, I found f-test to be useful for the purpose of reading and understanding scientific papers, as many of the papers I have read use f-test to test their hypothesis, or use a variation of f-test for more advanced techniques. It is a pre-requisite knowledge you need to know to understand the more advanced techniques.

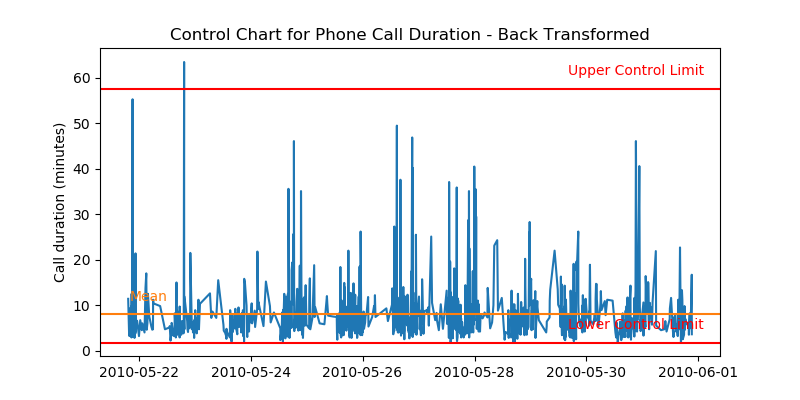

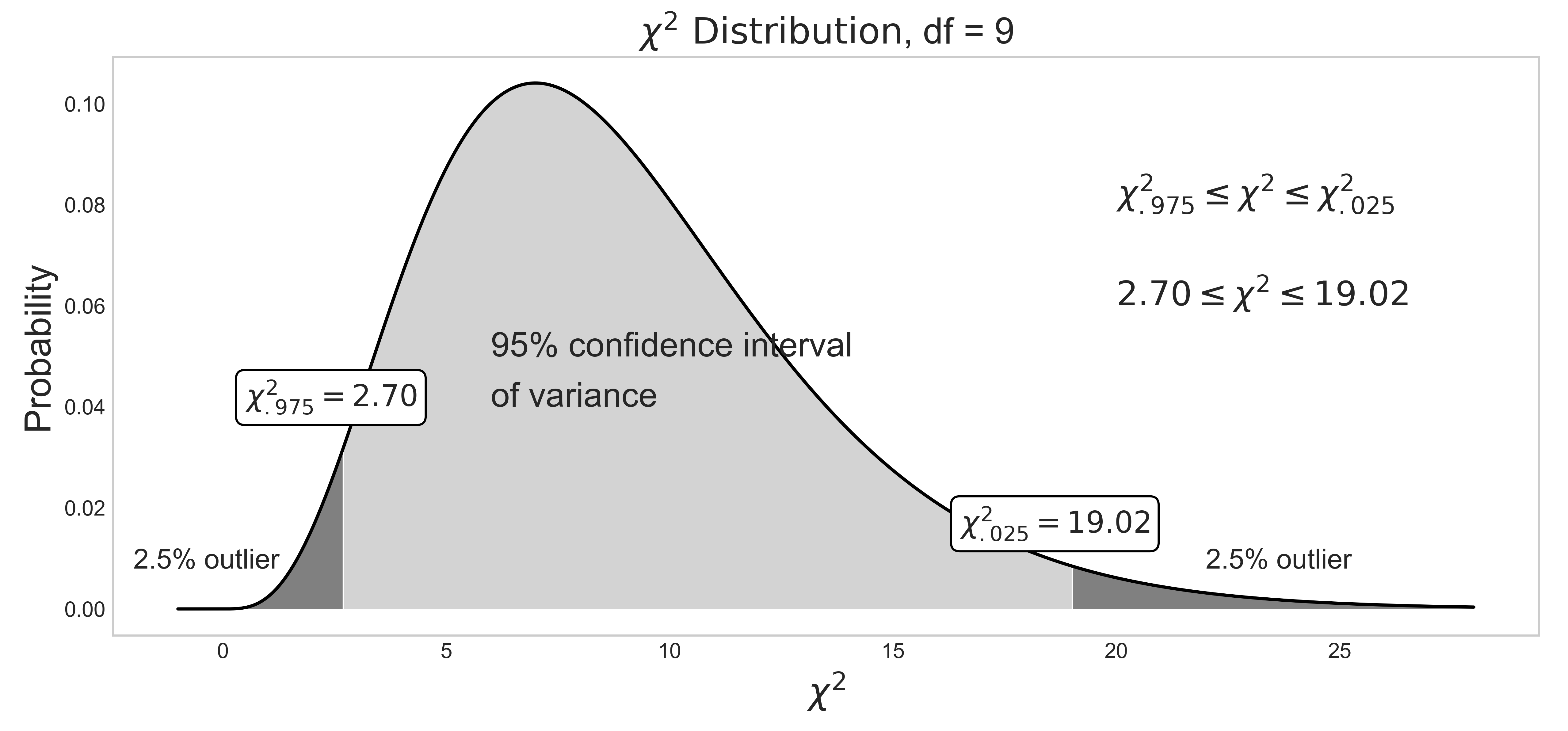

I mentioned that different statistics exhibit different distributions above. When a sample data set originates from a normal distribution, its sample means are normally distributed as shown in figure (7). On the other hand, its sample variances are chi-square ($\chi^2$) distributed as shown in figure (10) The curve is asymptotic, and never touches the x-axis. The cumulative probabilty, which is often referred to as "p-value" in hypothesis testing, propagates from the right (p-value=0) to the left (p-value=1). For example, $\chi^2_{.975}=2.70$ is in the lower/left-tail and $\chi^2_{.025} = 19.02$ is in the upper/right-tail. When the samples follow a normal distribution, the $\chi^2$ statistic values can be plugged into eq (10) to compute the confidence interval of variance.

Figure 10: 95% confidence interval of variance.

Source Code For Figure (10)

from scipy import stats

import matplotlib.pyplot as plt

import numpy as np

df = 9

x = np.linspace(-1, 28, 1000)

y = stats.chi2.pdf(x, df, loc=0, scale=1)

right_tail = stats.chi2.ppf(1 - 0.025, df)

left_tail = stats.chi2.ppf(1 - 0.975, df)

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(figsize=(12, 5))

ax.plot(x, y, c='black', label='Degrees of freedom = %d' % df)

ax.set_xlabel('$\chi^2$', fontsize=17)

ax.set_ylabel(r'Probability', fontsize=17)

ax.set_title(r'$\chi^2\ \mathrm{Distribution}$, df = %d' % df, fontsize=17)

ax.fill_between(x, 0, y, where=(np.array(x) > min(x)) & (np.array(x) <= left_tail), facecolor='grey')

ax.fill_between(x, 0, y, where=(np.array(x) > left_tail) & (np.array(x) < right_tail), facecolor='lightgrey')

ax.fill_between(x, 0, y, where=(np.array(x) > right_tail) & (np.array(x) <= max(x)), facecolor='grey')

ax.grid(False)

ax.text(22, 0.008, '2.5% outlier', fontsize=13)

ax.text(-2, 0.008, '2.5% outlier', fontsize=13)

ax.text(0.5, 0.04, '$\chi^2_{.975} = %.2f$' % left_tail, fontsize=14, bbox=dict(boxstyle='round', facecolor='white'))

ax.text(16.5, 0.015, '$\chi^2_{.025} = %.2f$' % right_tail, fontsize=14, bbox=dict(boxstyle='round', facecolor='white'))

ax.text(20, 0.08, '$\chi^2_{.975} \leq \chi^2 \leq \chi^2_{.025}$', fontsize=16)

ax.text(20, 0.06, '$2.70 \leq \chi^2 \leq 19.02$', fontsize=16)

ax.text(6, 0.05, '95% confidence interval', fontsize=16)

ax.text(6, 0.04, 'of variance', fontsize=16);

In confidence interval of variance, the degrees of freedom is:

Recall that the goal of any confidence interval is to estimate the population parameter from a fraction of its samples due to the high cost of obtaining measurement data of the entire data set, as explained in Population vs Samples. You attempt to estimate the population variance $\sigma^2$ within the range of uncertainty with the sample variance $s^2$ obtained from a set of n samples that are "hopefully" representative of the true population.

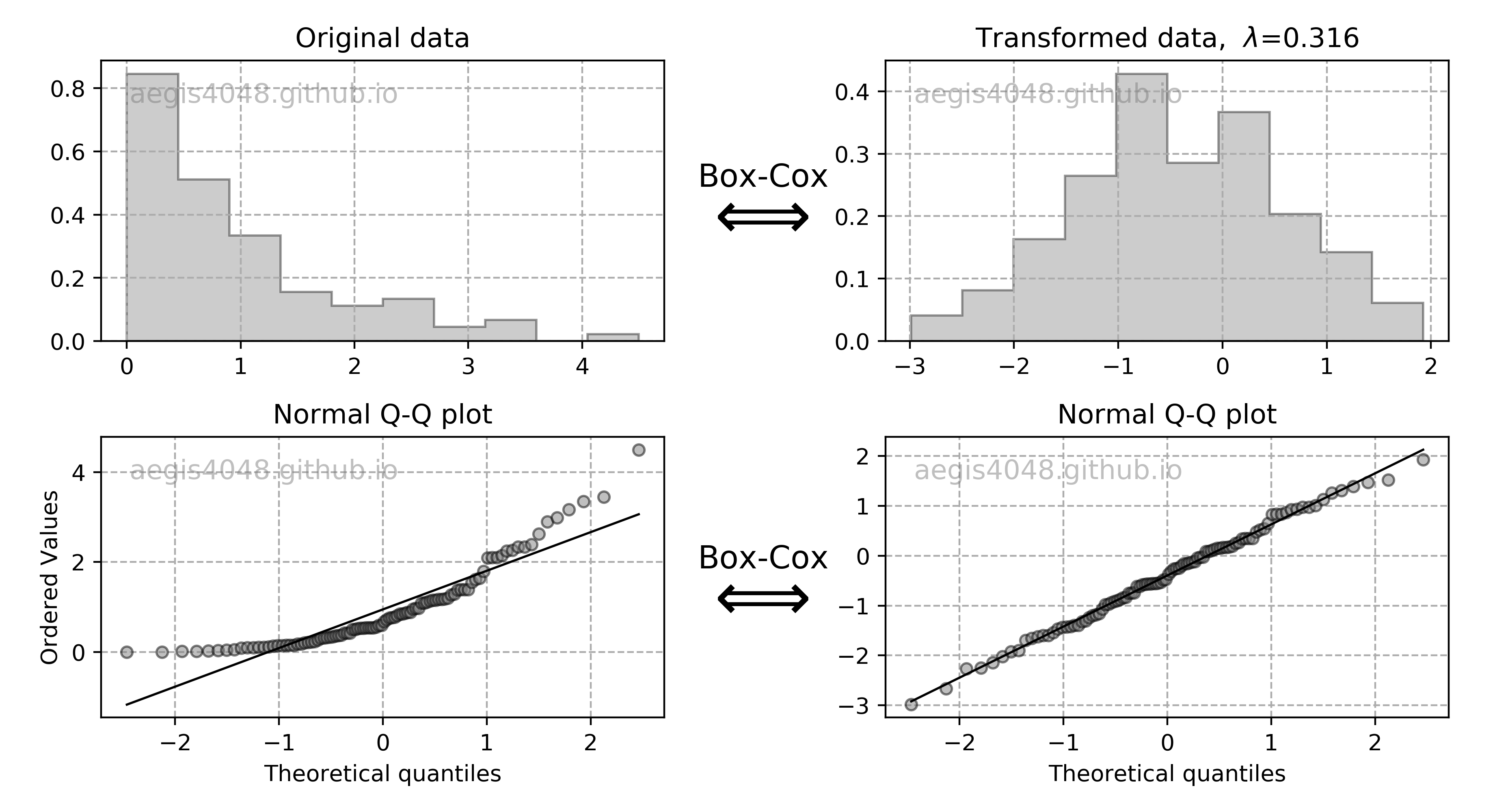

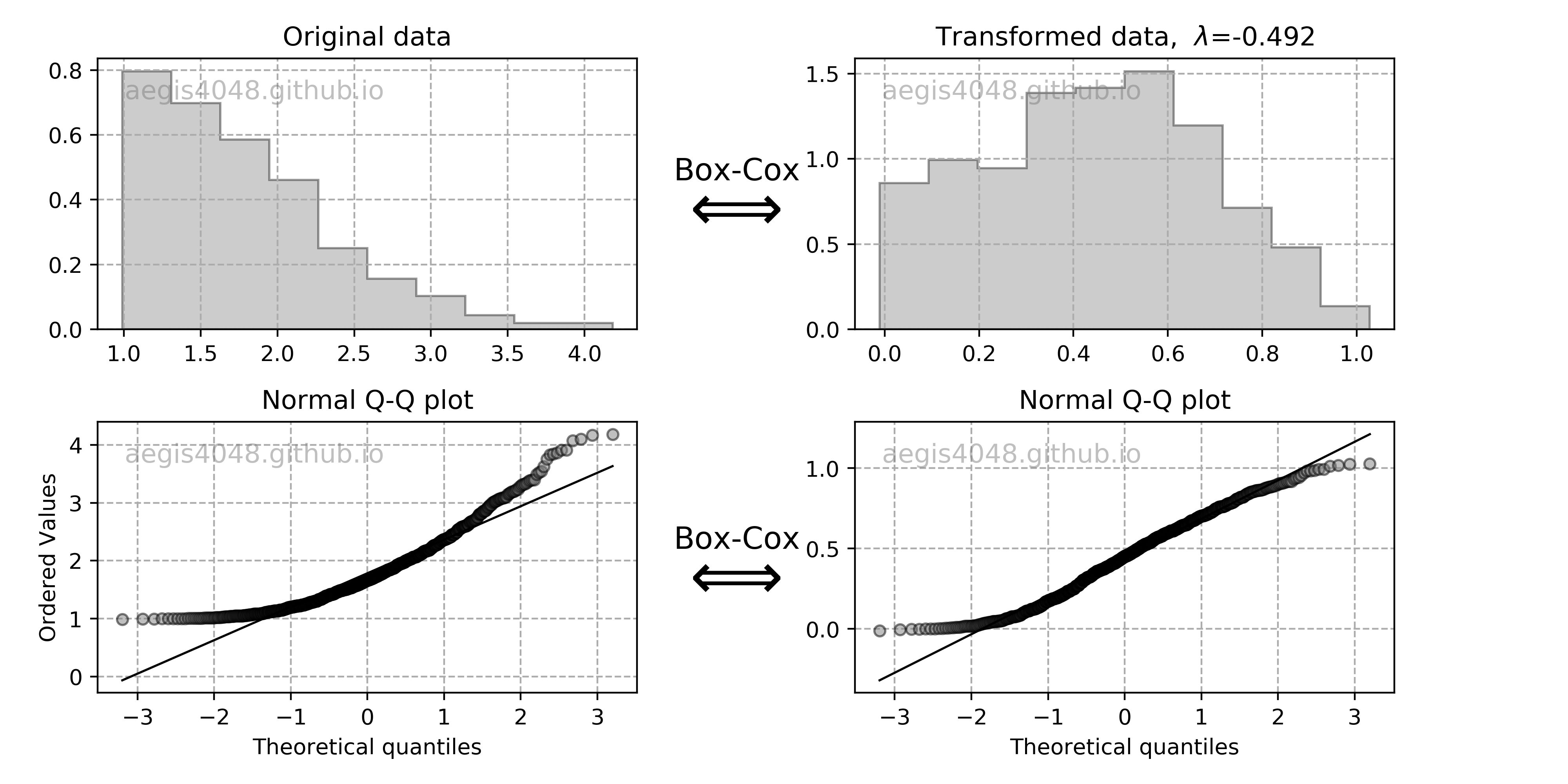

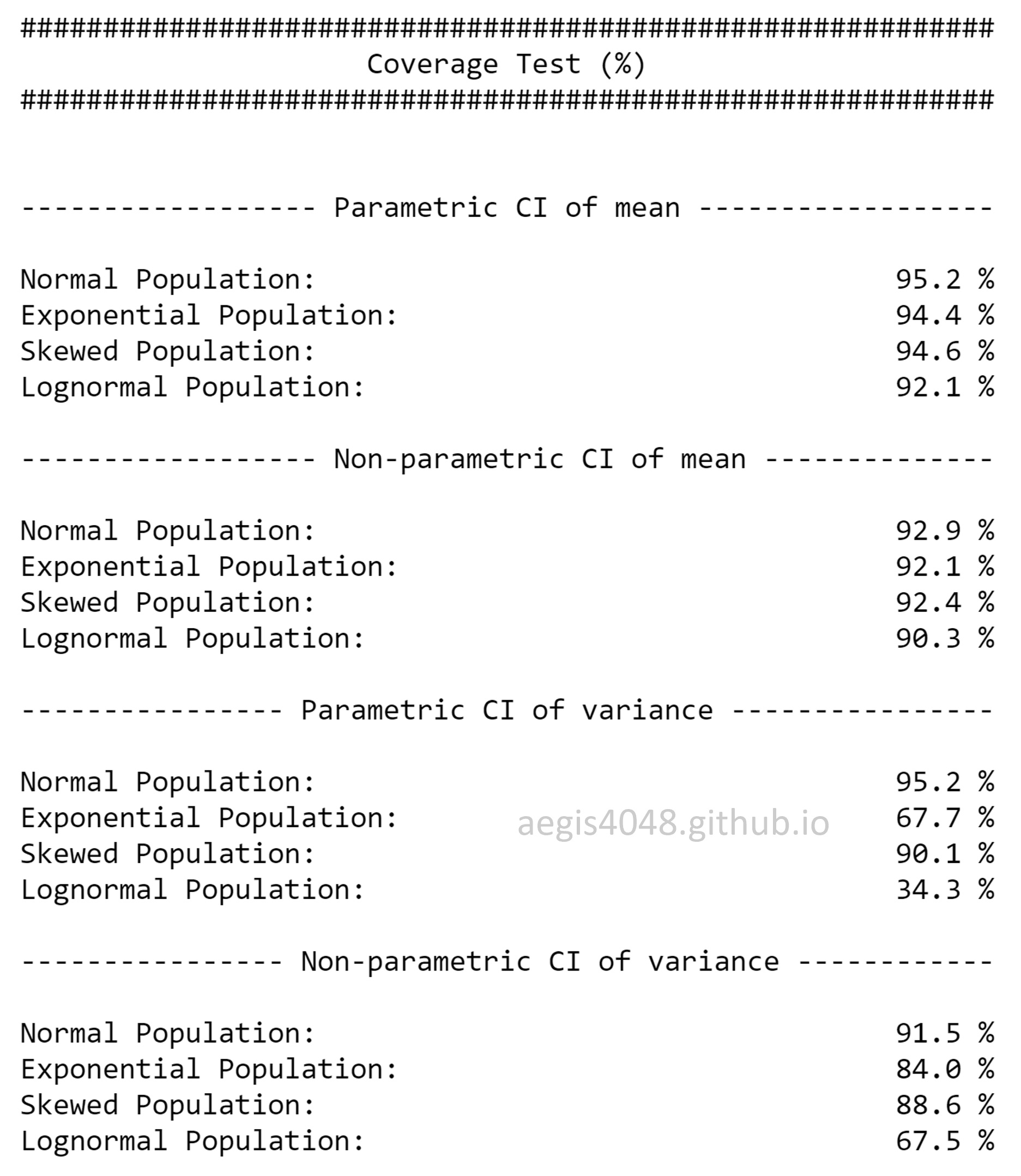

Confidence interval of variance assumes normality of samples, and is very sensitive to the sample distribution's deviation from normality. In case of non-normal sample distributions, you can either 1) transform the distribution to normal distribution with Box-Cox transformation, or 2) use non-parametric alternatives. For practitioners, I do not recommend 1) unless you really understand what you are doing, as the back transformation process of Box-Cox transformation can be tricky. Furthermore, it doesn't always result in successful transformation of non-normal to normal distribution, as discussed below. I recommend to use 2). If you have non-normal samples and your goal is to compute the C.I. of variance, use bootstrap. If your goal is to check the equality of variances of multiple sample data sets with hypothesis testing, use Levene's test. Both are the non-parametric alternatives that does not require normality of samples.

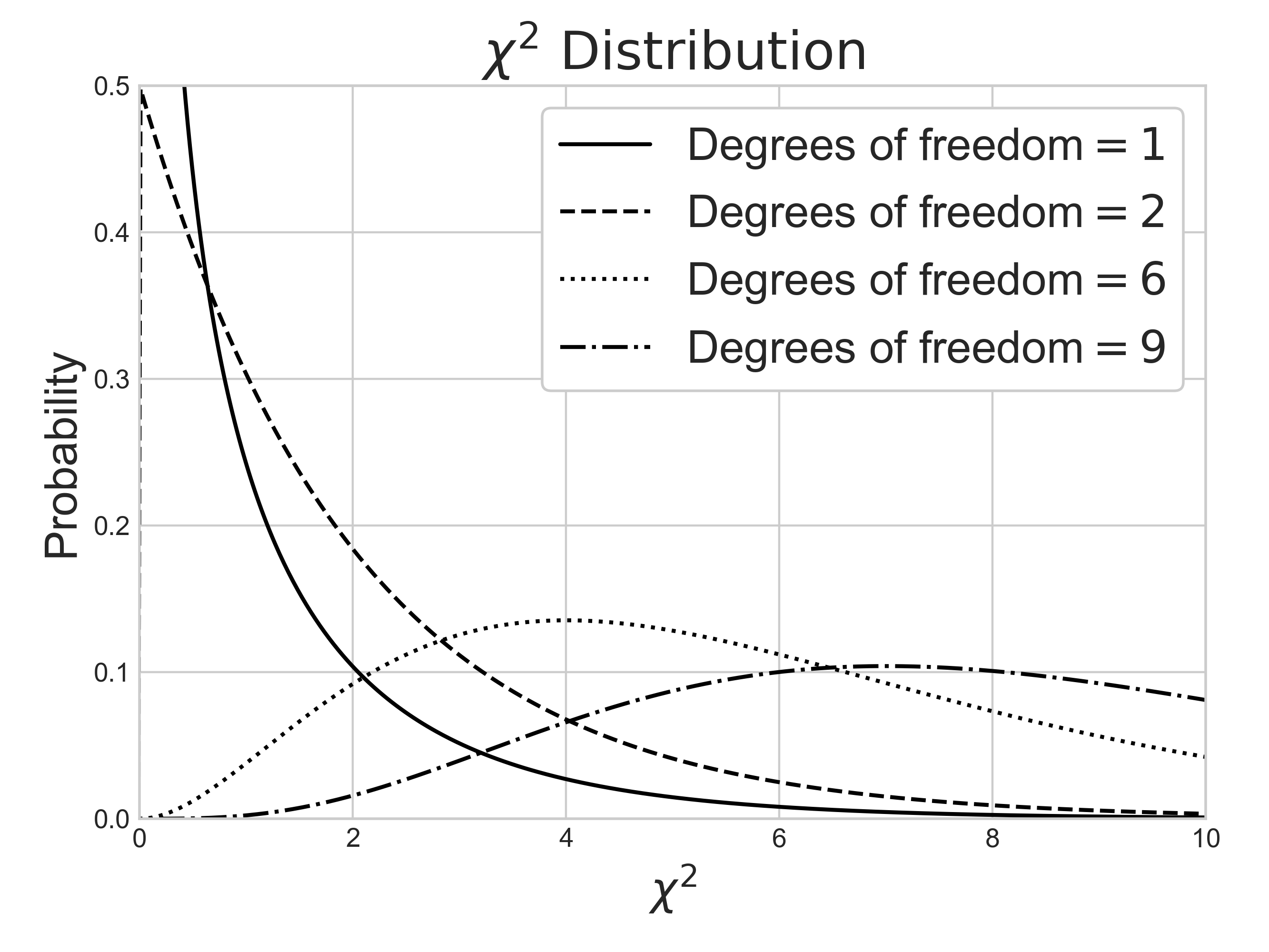

Notes: Chi-square $\chi^2$ distribution

Chi-square $\chi^2$ distribution is a function of degrees of freedom $df$. It is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing or in construction of confidence intervals.

It is used in the common chi-square goodness of fit test of an observed data set to a theoretical one. Let's say that there's a company that prints baseball cards. The company claims that 30% of the cards are rookies, 60% veterans but not All-Stars, and 10% are veteran All-Stars. Suppose that you purchased a deck of 100 cards. You found out that the card deck has 50 rookies, 45 veterans, and 5 All-Stars. Is this consistent with the company's claim? An answer to this question is explained in detail here using the chi-squared goodness of fit test. Note that the chi-square goodness of fit test does NOT require normality of data, but the chi-square test that checks if a variance equals a specified value DOES require normality of data.

When samples have a normal distribution, some of their statistics can be described by $\chi^2$ distributions. For example, the Mahalanobis distance follows $\chi^2$ distribution when samples are normally distributed, and can be used for multivariate outlier detection using $\chi^2$ hypothesis test. Variance of samples also follows $\chi^2$ distributions when samples are normally distributed, and can be used to construct the confidence interval of variances with eq (10).

By the central limit theorem, a $\chi^2$ distribution converges to a normal distribution for large sample size $n$. For many practical purposes, for $n$ > 50 the distribution is sufficiently close to a normal distribution for the difference to be ignored. Note that the sampling distribution of $ln(\chi^2)$ converges to normality much faster than the sampling distribution of $\chi^2$ as the logarithm removes much of the asymmetry.

Source Code For The Figure

from scipy import stats

import matplotlib.pyplot as plt

import numpy as np

df_values = [1, 2, 6, 9]

linestyles = ['-', '--', ':', '-.']

x = np.linspace(-1, 20, 1000)

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(figsize=(6.6666666, 5))

fig.tight_layout()

plt.subplots_adjust(left=0.09, right=0.96, bottom=0.12, top=0.93)

for df, ls in zip(df_values, linestyles):

ax.plot(x, stats.chi2.pdf(x, df, loc=0, scale=1),

ls=ls, c='black', label=r'Degrees of freedom$=%i$' % df)

ax.set_xlim(0, 10)

ax.set_ylim(0, 0.5)

ax.set_xlabel('$\chi^2$', fontsize=14)

ax.set_ylabel(r'Probability', fontsize=14)

ax.set_title(r'$\chi^2\ \mathrm{Distribution}$')

ax.legend(loc='best', fontsize=11, framealpha=1, frameon=True)

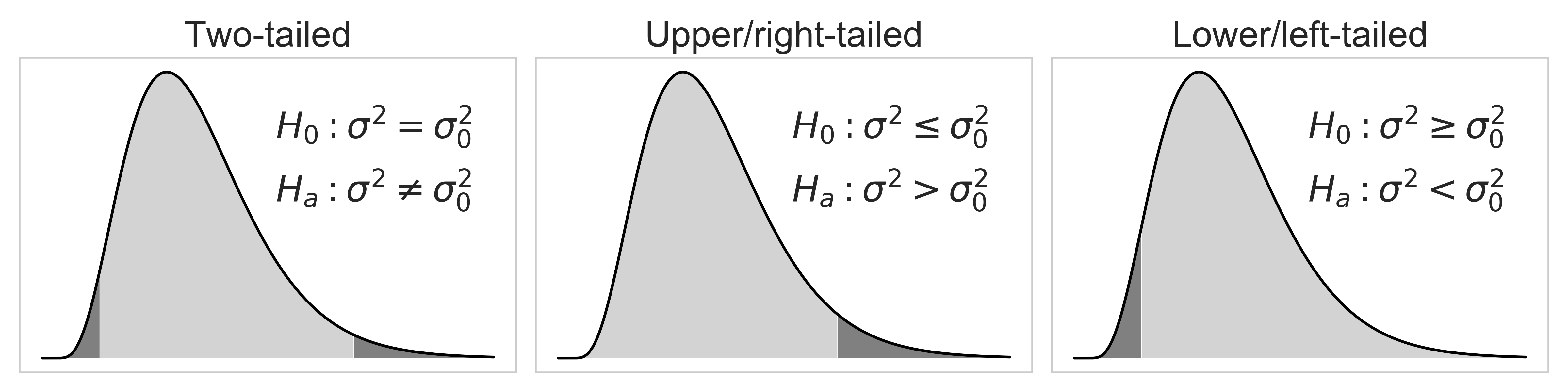

Notes: One-tail vs two-tail

As you explore more about the field of statistics, you will encounter many scientific papers or articles using mostly upper/right-tailed f-test, instead of two-tailed or lower/left-tailed f-test. Why? That's because they don't have much practical use in real-life. This information is little beyond the scope of this article, but I still want to touch on it because the C.I. of variance forms the foundation of f-test.

When it comes to the test of variances, we often want to maintain a low variance than high variance, because the high variance is often related to high risk or instability. We are usually interested in knowing if a target population variance $\sigma^2$ is lower than a specified value $\sigma^2_0$, not the other way around. This can be doen by using the upper/right-tailed hypothesis test, which is shown in the middle plot below. If the calculated statistic for f-test falls within the dark grey area, you reject your null hypothesis $H_0$, and accept the alternate hypothesis $H_a$

Source Code For The Figure

from scipy import stats

import matplotlib.pyplot as plt

import numpy as np

df = 9

x = np.linspace(-1, 28, 1000)

y = stats.chi2.pdf(x, df, loc=0, scale=1)

# two-tailed

two_right_tail = stats.chi2.ppf(1 - 0.025, df)

two_left_tail = stats.chi2.ppf(1 - 0.975, df)

# one tailed

one_right_tail = stats.chi2.ppf(1 - 0.05, df)

one_left_tail = stats.chi2.ppf(1 - 0.95, df)

plt.style.use('seaborn-whitegrid')

fig, axes = plt.subplots(1, 3, figsize=(12, 3))

for ax in axes:

ax.plot(x, y, c='black')

ax.grid(False)

ax.xaxis.set_major_formatter(plt.NullFormatter())

ax.yaxis.set_major_formatter(plt.NullFormatter())

axes[0].fill_between(x, 0, y, where=(np.array(x) > min(x)) & (np.array(x) <= two_left_tail), facecolor='grey')

axes[0].fill_between(x, 0, y, where=(np.array(x) > two_left_tail) & (np.array(x) < two_right_tail), facecolor='lightgrey')

axes[0].fill_between(x, 0, y, where=(np.array(x) > two_right_tail) & (np.array(x) <= max(x)), facecolor='grey')

axes[0].set_title('Two-tailed', fontsize=20)

axes[0].text(14, 0.08, r'$H_0: \sigma^2 = \sigma_0^2$', fontsize=20)

axes[0].text(14, 0.057, r'$H_a: \sigma^2 \neq \sigma_0^2$', fontsize=20)

axes[1].fill_between(x, 0, y, where=(np.array(x) > min(x)) & (np.array(x) < one_right_tail), facecolor='lightgrey')

axes[1].fill_between(x, 0, y, where=(np.array(x) > one_right_tail) & (np.array(x) <= max(x)), facecolor='grey')

axes[1].set_title('Upper/right-tailed', fontsize=20)

axes[1].text(14, 0.08, r'$H_0: \sigma^2 \leq \sigma_0^2$', fontsize=20)

axes[1].text(14, 0.057, r'$H_a: \sigma^2 > \sigma_0^2$', fontsize=20)

axes[2].fill_between(x, 0, y, where=(np.array(x) > min(x)) & (np.array(x) <= one_left_tail), facecolor='grey')

axes[2].fill_between(x, 0, y, where=(np.array(x) > one_left_tail) & (np.array(x) <= max(x)), facecolor='lightgrey')

axes[2].set_title('Lower/left-tailed', fontsize=20)

axes[2].text(14, 0.08, r'$H_0: \sigma^2 \geq \sigma_0^2$', fontsize=20)

axes[2].text(14, 0.057, r'$H_a: \sigma^2 < \sigma_0^2$', fontsize=20)

fig.tight_layout()

Pythonic Tip: Computing confidence interval of variance

Unfortunately, there's no Python or R library that computes the confidence interval of variance. The fact that the pre-built function does not exist both in Python and R suggests that the C.I. of variance is seldom used. But as I mentioned before, the reason that I introduce the C.I. of variance is because it forms the foundation of f-test, a statistical hypothesis test that is widely used in scientific papers.

The C.I. of variance can be manually computed with eq (10). Don't forget to compute sample variance, instead of population variance by setting ddof=1 as explained above.

from scipy import stats

import numpy as np

arr = [8.69, 8.15, 9.25, 9.45, 8.96, 8.65, 8.43, 8.79, 8.63]

alpha = 0.05 # significance level = 5%

n = len(arr) # sample sizes

s2 = np.var(arr, ddof=1) # sample variance

df = n - 1 # degrees of freedom

upper = (n - 1) * s2 / stats.chi2.ppf(alpha / 2, df)

lower = (n - 1) * s2 / stats.chi2.ppf(1 - alpha / 2, df)

(lower, upper)

The output suggests that the 95% confidence interval of variance is — $\text{C.I.}_{variance}: \,\, 0.072 < \sigma^2 < 0.582$

4.4. Confidence interval of other statistics: Bootstrap¶

(Note: For those people who have web-development experience, this is not CSS Bootstrap.)

I mentioned that different formulas are used to construct confidence intervals of different statistics above. There are three problems with computing the confidence interval of statistics with analytical solutions:

Not all statistics have formulas for their confidence intervals

Their formulas can be so convoluted, that it may be better to use numerical alternatives

You have to memorize their formulas

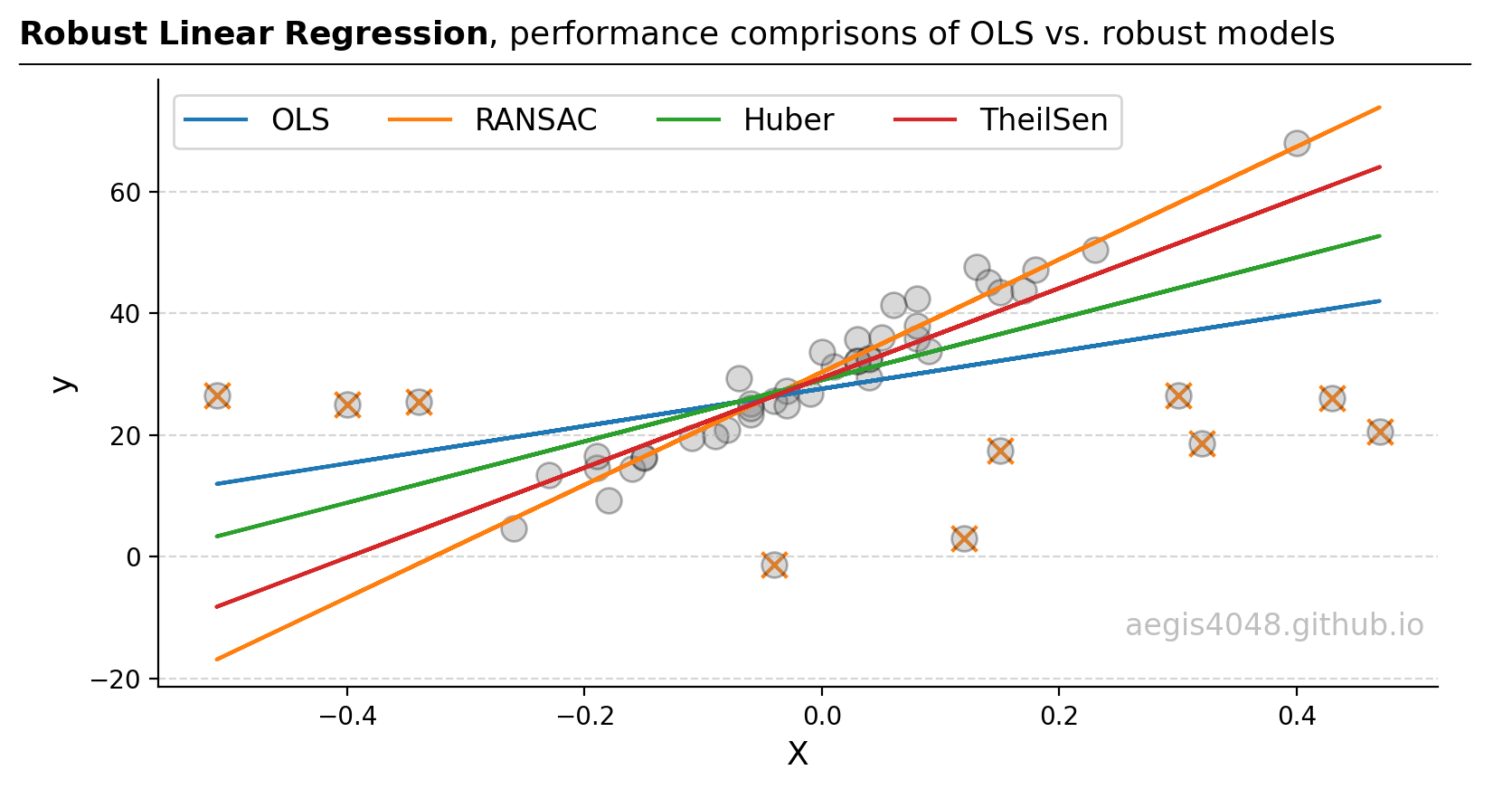

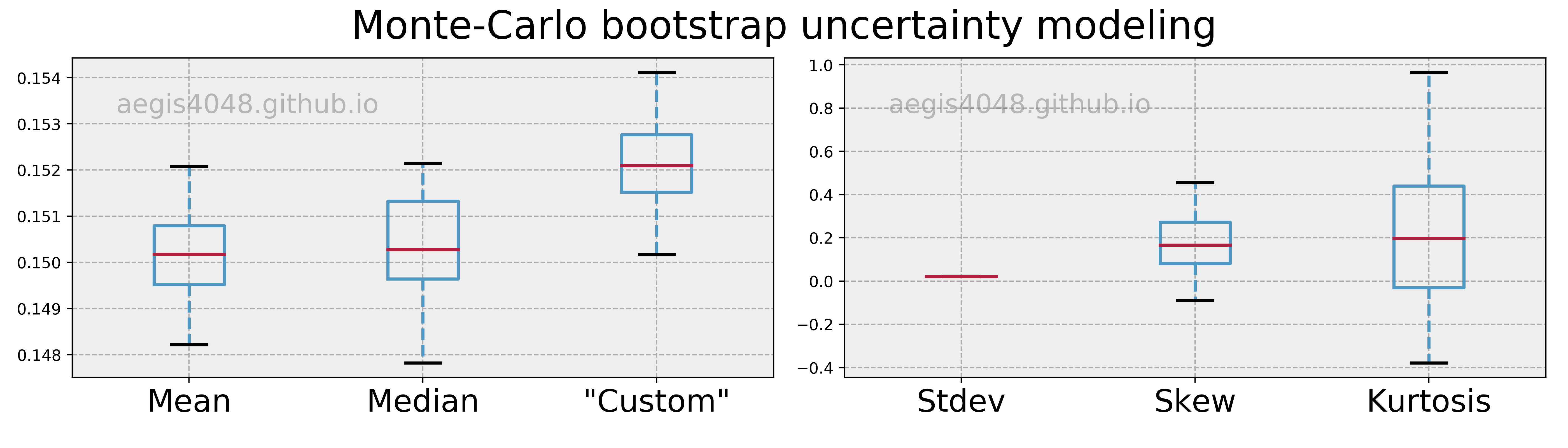

Bootstrapping is nice because it allows you to avoid these practical concerns. For example, there are no formulas to compute the confidence interval of covariance and median. On the other hand, regression coefficient has its own formula for its confidence interval, but the formulas get really messy in cases of multi-linear or non-linear regression. Wouldn't it be nice if there's a "magic" that saves you from all the math you have to worry about?

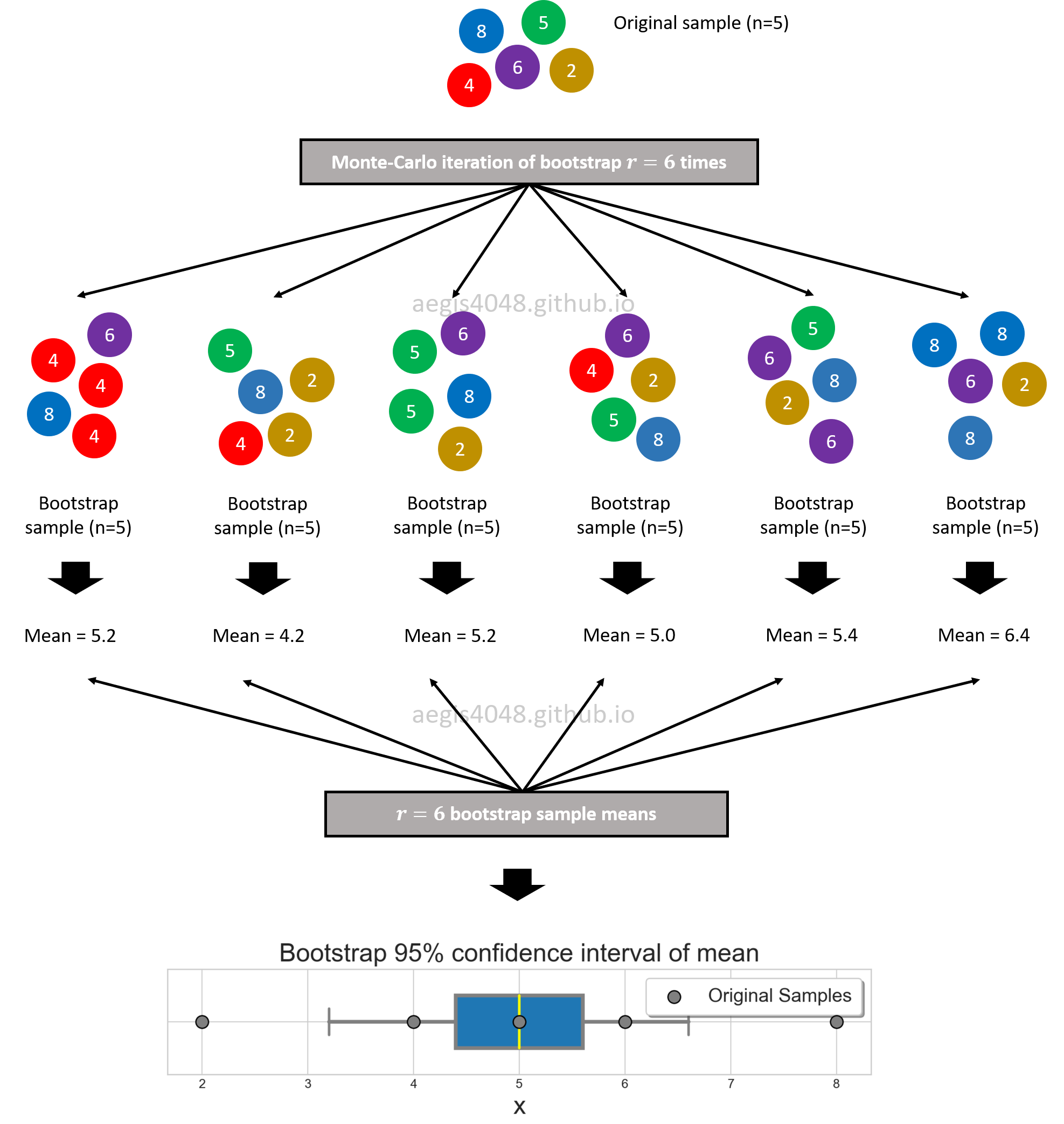

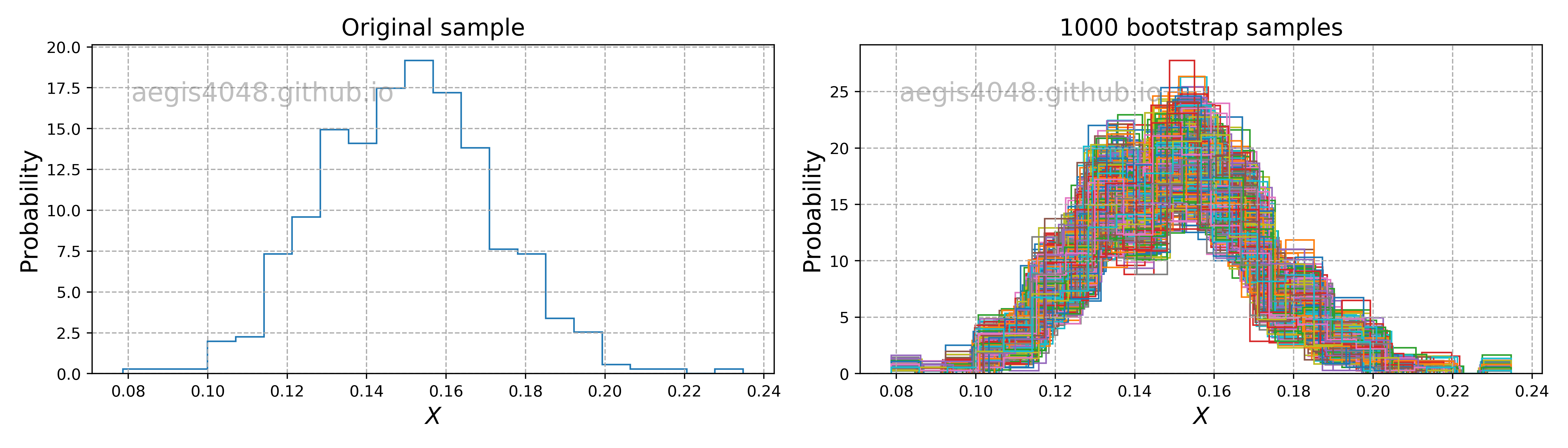

Bootstrapping is a statistical method for estimating the sampling distribution of a statistic by sampling with replacement from the original sample, most often with the purpose of estimating confidence intervals of a population parameter like a mean, median, proportion, correlation coefficient or regression coefficient.

Bootstrap can construct confidence intervals of any statistics when combined with Monte Carlo method. The process is visually shown in fig (?). Initially you have 5 samples $[8, 5, 4, 6, 2]$ that you collected from an unknown population. You randomly draw $n=5$ samples from the original sample pool WITH REPLACEMENT, and they become your single bootstrap sample. You repeat this process $r=6$ times to collect multiple bootstrap samples. For each bootstrap sample, you run your functions to compute the statistic of your interest: in this case, np.mean(single_boot). Now you have $r=6$ sample means obtained from $r$ bootstrap samples. You can construct 95% confidence interval of mean with percentile method: np.percentile(mutliple_boot_means, 97.5), np.percentile(mutliple_boot_means, 2.5)

Note that the bootstraped samples will contain duplicate elements a lot, due to random sampling WITH REPLACEMENT. This causes problems with bootstrapping regression models, as explained below.

Figure 11: Bootstrap 95% confidence interval of mean.

Source Code For Figure (11)

import matplotlib.pyplot as plt

import numpy as np

np.random.seed(42)

arr = [8, 5, 4, 6, 2]

####################### Bootstrap #######################

num_boot_samples = 1000

def estimator(l):

# statistic of interest; Ex: mean, median, variance ...

return np.mean(l)

boot = [estimator(np.random.choice(arr, len(arr))) for _ in range(num_boot_samples)]

#########################################################

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(figsize=(10, 4))

returns = ax.boxplot(boot, widths=0.5, whis=[2.5, 97.5], showfliers=False,

patch_artist=True,

boxprops=dict(linewidth=3.0, color='grey'),

whiskerprops=dict(linewidth=3.0, color='grey'), vert=False,

capprops=dict(linewidth=2.0, color='grey'),

medianprops=dict(linewidth=2.0, color='yellow'))

ax.set_aspect(1)

#ax.set_ylim(1.5, -.5)

ax.set_xlabel(r'$\bar{X}$', fontsize=20)

ax.yaxis.set_major_formatter(plt.NullFormatter())

ax.set_title('Bootstrap 95% confidence interval of mean', fontsize=20)

ax.scatter(arr, [1, 1, 1, 1, 1], facecolors='grey', edgecolors='k', zorder=10, label='Original Samples', s=100)

ax.legend(fontsize=15, fancybox=True, framealpha=1, shadow=True, borderpad=0.5, frameon=True);

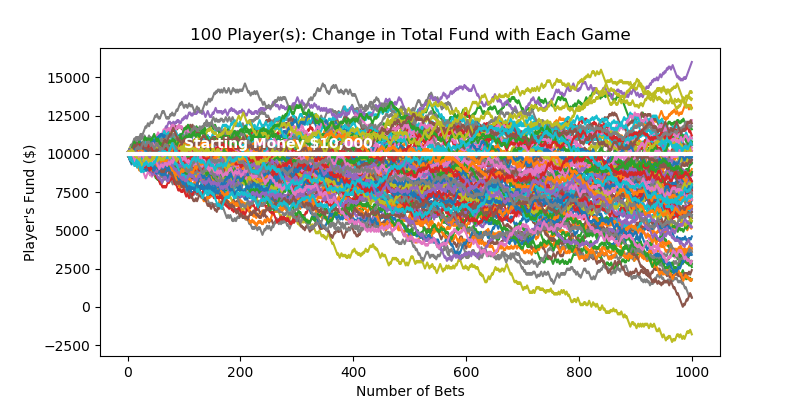

Notes: Monte-Carlo method

During your study of statistics, there's a good chance that you've heard of the word, "Monte-Carlo". It refers to the process that relies on repeated generation of random numbers to investigate some characteristic of a statistic which is hard to derive analytically. The process is composed of mainly two parts: random number generator, and for-loop. The random number generator can be parametric, or non-parametric. In case of parametric simulation, you must have some previous knowledge about the population of your interest, such as its shape. In the below code snippet, you assume that the sample is from a specific distribution: normal, lognormal, chisquare. Then, you repeatedly randomly draw samples from the pre-defined distribution with a for-loop:

Parametric Monte-Carlo simulation

# random number generator = normal distribution

sim_1 = [np.random.normal(np.mean(sample), np.mean(sample)) for _ in range(iterations)]

# random number generator = lognormal distribution

sim_2 = [np.random.lognormal(np.mean(sample), np.mean(sample)) for _ in range(iterations)]

# random number generator = chi-square distribution

sim_3 = [np.random.chisquare(len(sample)) for _ in range(iterations)]

In case of non-parametric simulation, the random number generator does not assume anything about the shape of the population. Non-parmetric bootstrap would be the choice of your random number generator in this case (Note: some variations of bootstrap are parametric):

Non-Parametric Monte-Carlo simulation

# random number generator = non-parametric bootstrap

sim_4 = [np.random.choice(original_sample) for _ in range(iterations)]

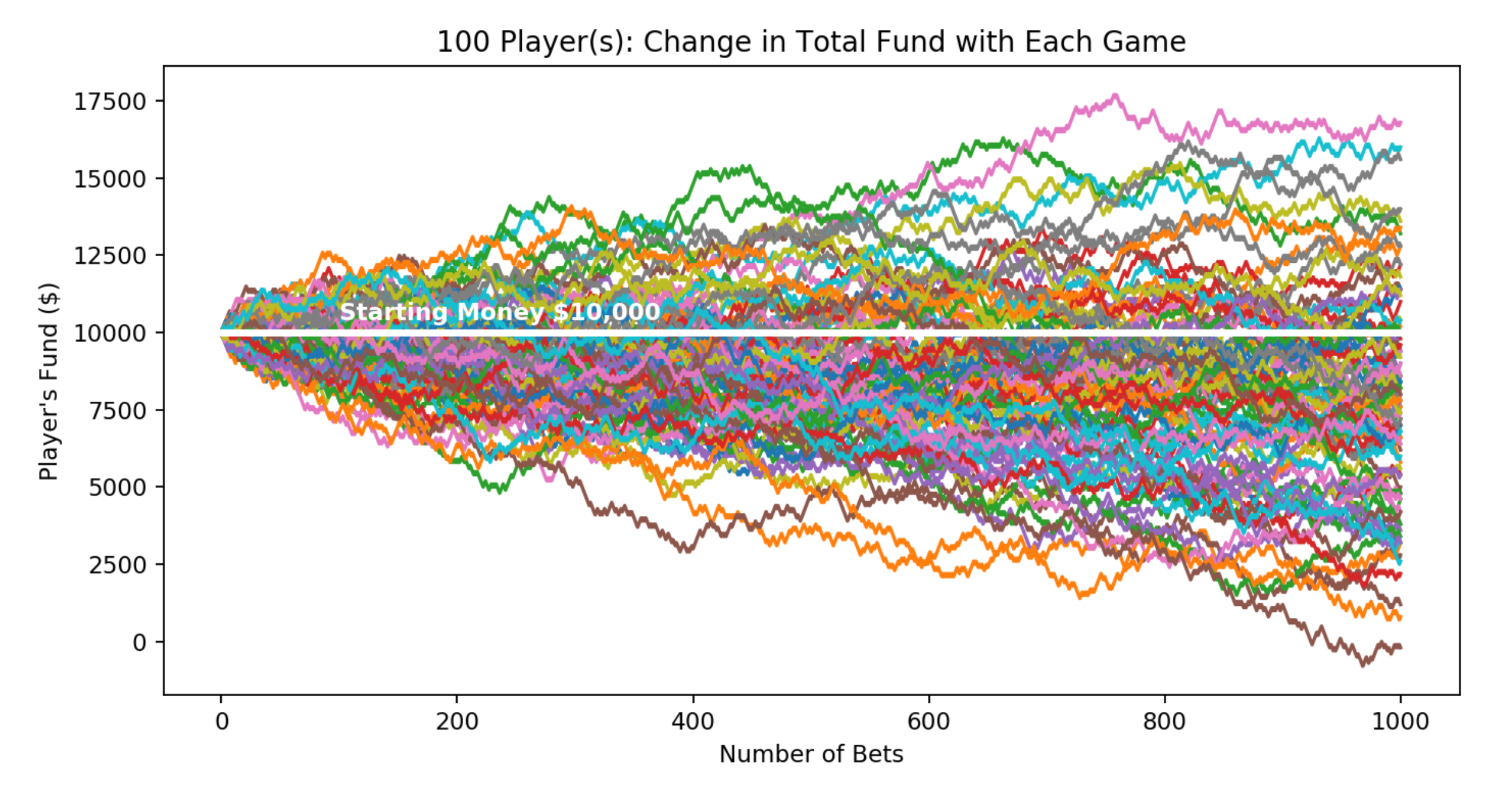

You can create multiple instances of sim_n objects above to experiment with your data set; you use Monte-Carlo simulations to produce hundreds or thousands of "possible outcomes". The results are analyzed to get probabilities of different outcomes occuring. The application of Monte-Carlo method includes constructing confidence interval of statistics with Bootstrap shown in figure (11), and profit modeling of casino dice roll games.

Why does bootstrapping work?

(Before you read this section, make sure you understand the difference between Population vs Samples.)

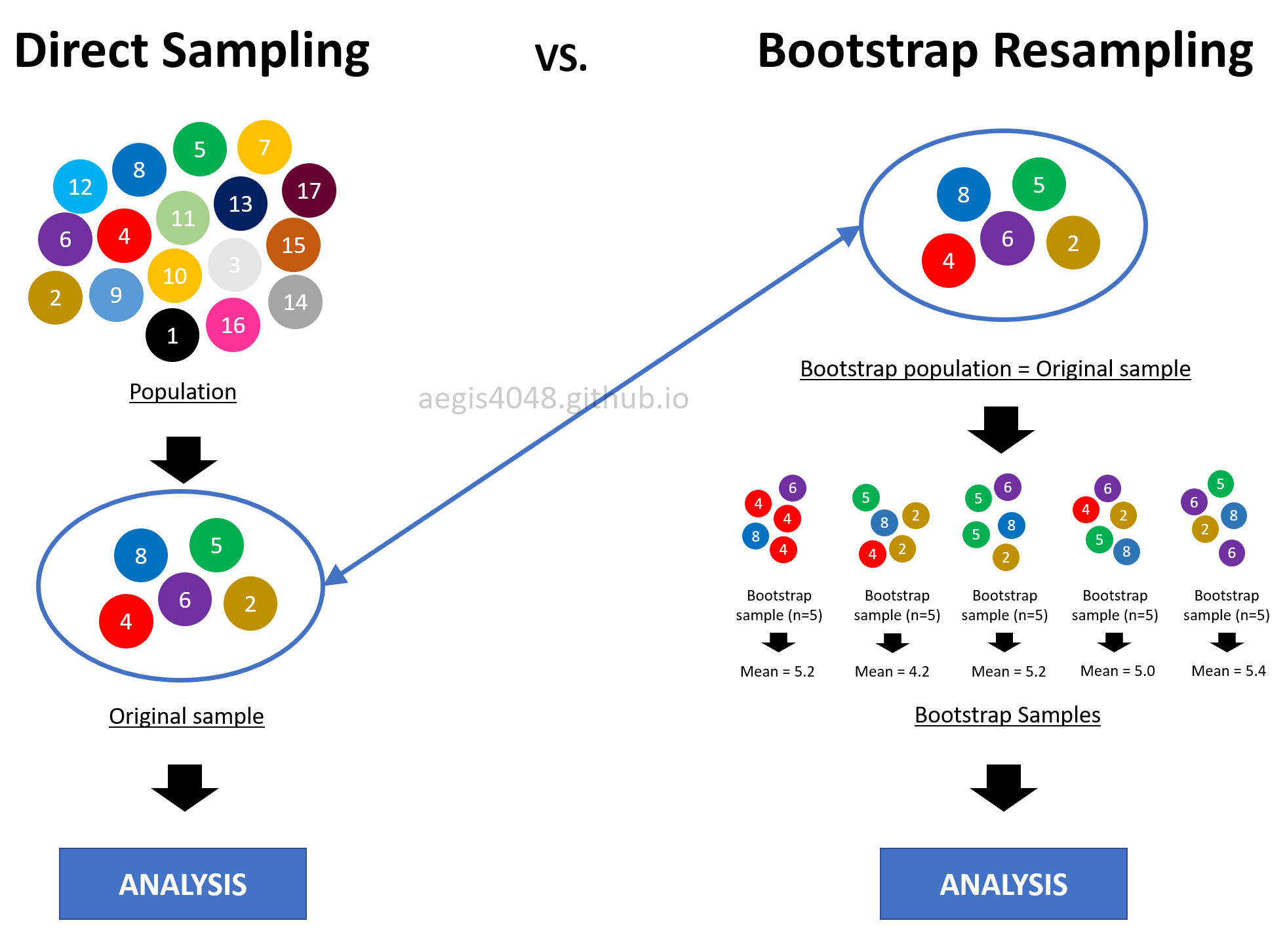

Practitioners wonder WHY bootstrapping works: why is it that resampling the same sample over and over gives good results? If we are resampling from our sample, how is it that we are learning something about the population rather than only about the sample? There seems to be a leap which is somewhat counter-intuitive. The idea comes from the assumption that the sample is a reasonable representation of its underlying population — the population is to the sample as the sample is to the bootstrap samples.

You want to ask question of a population, but you can't because you lack the resources to get measurement data of all possible data points. So you take a fraction of the population, a.k.a the sample, and ask the question of it instead. Now, how confident you should be that the sample answer is close to the population answer depends on how well the sample represents the underlying population. One way you might learn about this is to take samples from different portions of the population again and again. You ask the same question to the multiple samples you collected, and see the variability of the different sample answers to quantify the related uncertainty of your estimation. When this is not possible due to practical limitations, you make some assumptions about the population (ex: population is normally distributed), or use the information in the collected sample to learn about the population.

In bootstrapping, you treat the original sample (size=$n$) you randomly acquired from the population as if it's the population itself of size $n$. From the original sample that you treated to be the population, you randomly draw samples, each of size $n$, with replacement multiple times to simulate direct sampling from the original population. By doing so, you essentially imitate sampling different portions of the original population multiple times. This idea is shown in figure (12).

This is a reasonable thing to do for two reasons. First, the sample in your had is the best you've got, indeed the only informatoin you have about the population. Second, if the original sample is randomly chosen, it will look like the original population they came from. This means that the sample is a good representation of its underlying population. However, if its not a good representation of the population, bootstrap fails. In fact, there's not much you can do in the first place if your sample is biased.

Figure 12: Intuitive idea behind Bootstrapping

Assumptions and Limitations of Bootstrap

Bootstrapping is great because it saves you from the normality assumption of distributions and all the math you have to know to construct confidence intervals. However, just like many other techniques, bootstrap has its own caveats. While bootstrap is distribution-free, it is not assumption-free. The assumptions are listed in this section.

Please note that there is a humongous variety of the bootstrap procedures, each addressing the particular quirk in either the statistic, the sample size, the dependence, or whatever an issue with the bootstrap could be. I am not introducing all of them here as the in-depth technical discussion of bootstrap needs another devoted post, but I still want you to know some of the critical assumptions; I want you to know what you don't know, so that you can google later to learn in-depth.

A sample is a good representation of its underlying population

The fundamental principle of bootstrapping is that the original sample is a good representation of its underlying population. Bootstrapping resamples from the original samples. This means that if the original sample is biased, the resulting bootstrap samples will also be biased. However, this is a problem of not just bootstrapping, but all statistical techniques. There's not much you can do if the only piece of information you have about the population is corrupted, after all.

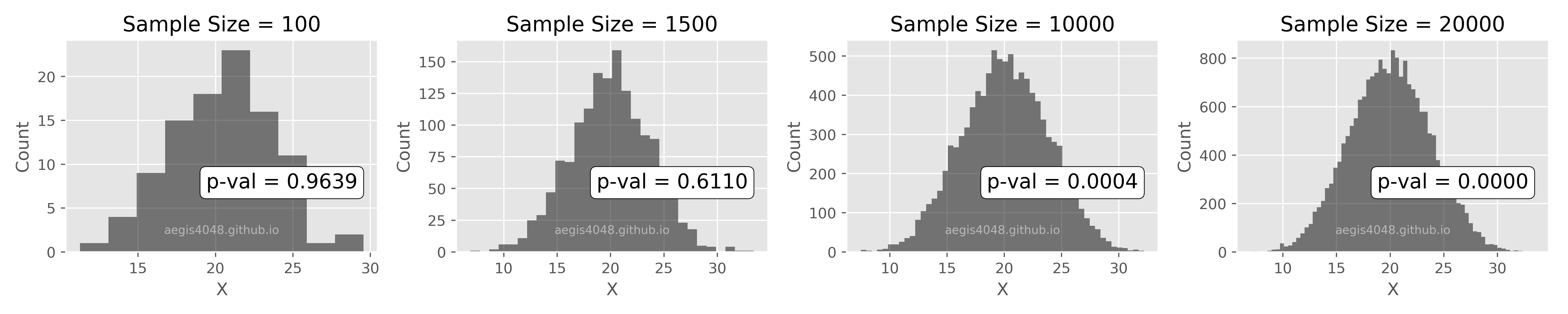

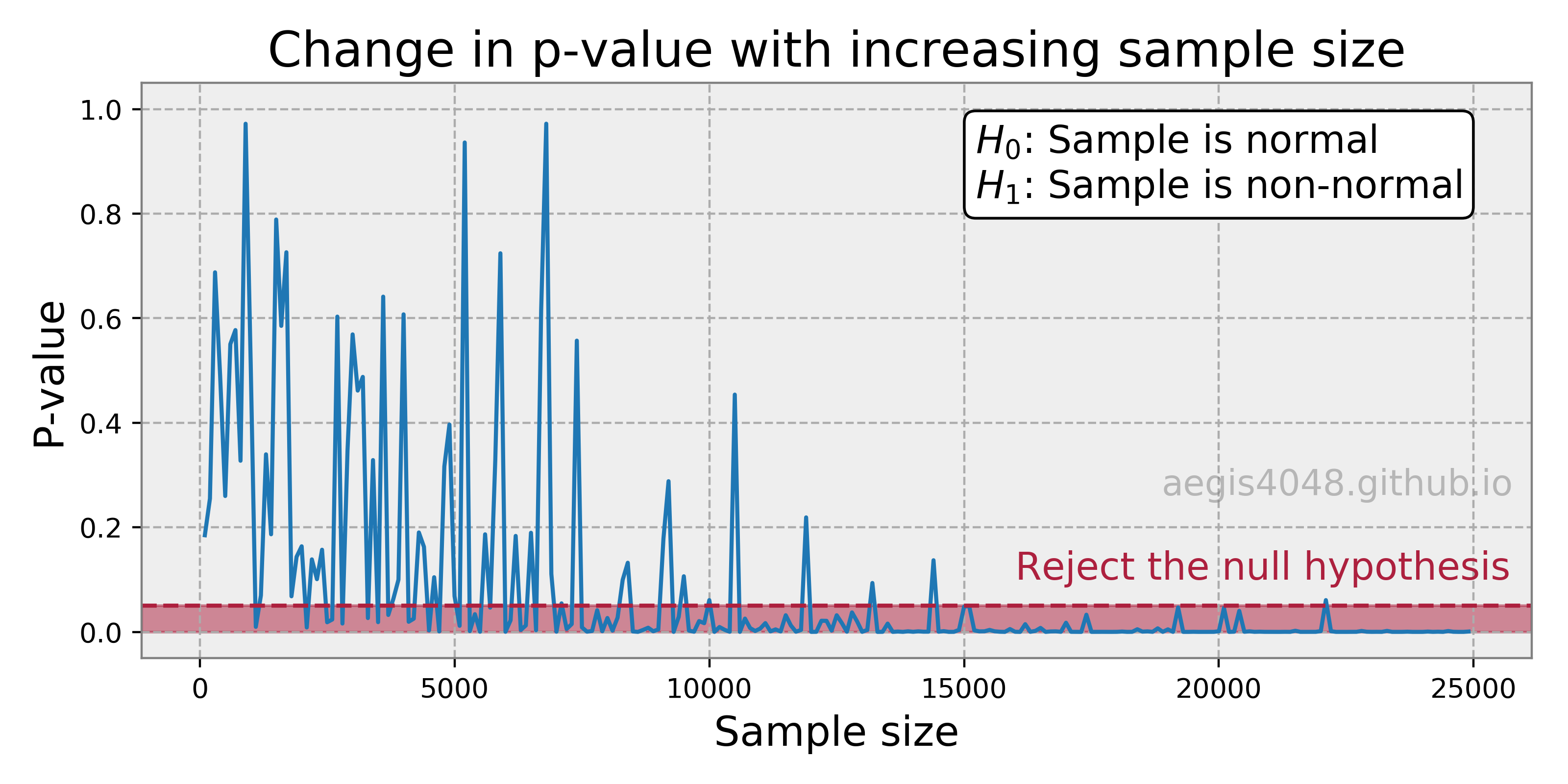

Insufficient samples make the bootstrap C.I. to be narrower than the analytical C.I.

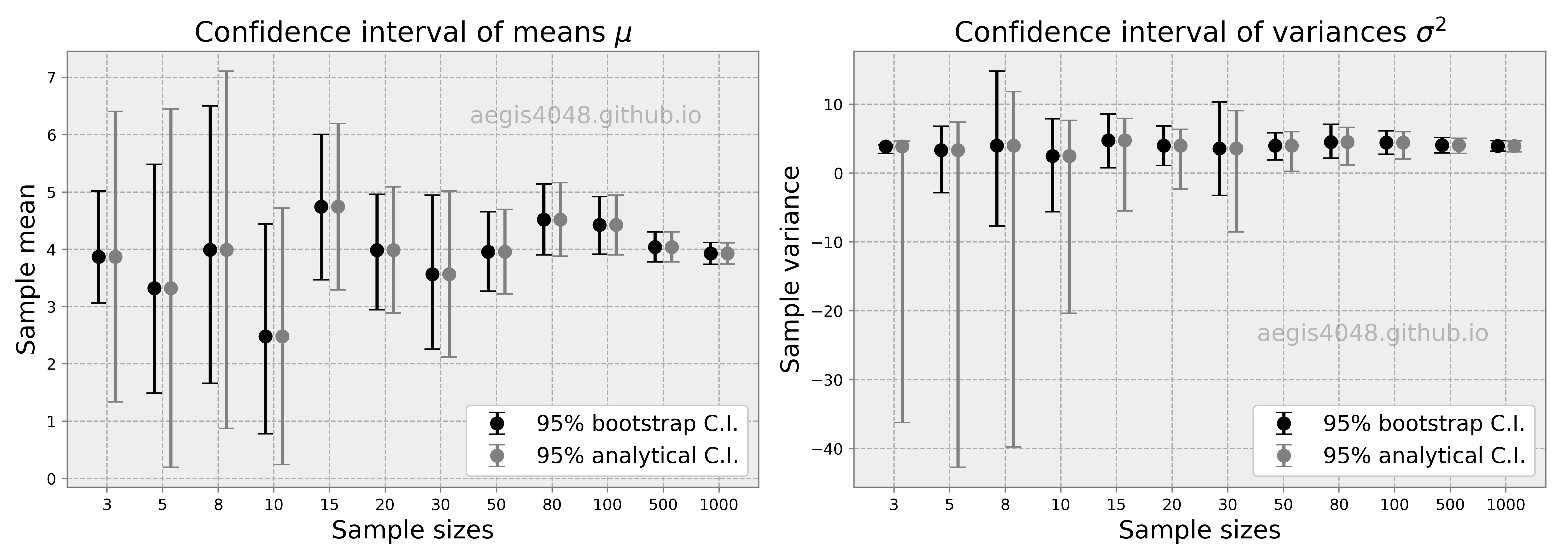

There's a myth in the field of statistics that bootstrap is a "cure" for small sample size. NO, it's not. First, if you have too small sample, by a high chance it is not diverse enough to represent all (reasonably) possible aspects of its population. Therefore, it is not a good representation of its population. Second, small sample size makes its bootstrap C.I. to be narrower than the analytical C.I.. This means that bootstrap C.I. reports small uncertainty even when the sample size is small. Not only this is counter-intuitive, but also it is a violation of the mathematic property of C.I. described by eq (1); small sample size $n$ in the denominator of eq (1) should give wider C.I.. But this is not true with bootstrap C.I. as shown in the below simulation result in figure (13).

Three things to note in the figure. First, the upper & lower error bars of bootstrap C.I. of means are asymmetric. This is because bootstrap C.I. is not based on $\pm$ standard error method. This is a very useful property to estimate the central tendency of asymmetric (skewed) populations. Second, both bootstrap and analytical C.I. become narrower with the increasing sample size. This intuitively and mathematically makes sense. Third, bootstap C.I. approximates the analytical C.I. very well with large sample size. This is perhaps the most important advantage of using bootstrap. If you have large sample size, you really don't have to worry anything else (except the indepence of samples), and just stick to bootstrap. All the disadvantages of bootstrap will be overcome by the large sample size.

One might wonder what is "large" enough in practical applications. Unfortunately, the definition of "large" is different for every applications. In the simulation result of figure (13), it seems that $n = 20$ falls in the category of "large" to approximate C.I. of the mean of a normally distributed population with bootstrap. On the other hand, $n=100$ seems to be "large" in case of C.I. of the variances of a normally distributed population. The definition of "large $n$" can vary with different applications (ex: non-normal data, C.I. of regression coefficient or covariance). Carefully investigate your samples to have a good definition of "large".

Figure 13: Comparison of Bootstrap vs Analytical C.I. for different sample sizes

Source Code For Figure (13)

import matplotlib.pyplot as plt

import numpy as np

from scipy import stats

sample_sizes = [3, 5, 8, 10, 15, 20, 30, 50, 80, 100, 500, 1000]

mean = 4 # sample mean

std = 3 # sample standard deviation

boot_iter = 10000 # bootstrap iterations

boot_mean_lo = np.array([])

boot_mean_hi = np.array([])

analy_mean_lo = np.array([])

analy_mean_hi = np.array([])

boot_var_lo = np.array([])

boot_var_hi = np.array([])

analy_var_lo = np.array([])

analy_var_hi = np.array([])

means = np.array([])

variances = np.array([])

for size in sample_sizes:

np.random.seed(size * 5)

arr = np.random.normal(mean, std, size) # randomly draw from a normal distribution

# analytical confidence interval of mean

means = np.append(means, np.mean(arr))

analy_conf_mean = stats.t.interval(1 - 0.05, len(arr) - 1, loc=np.mean(arr), scale=stats.sem(arr))

analy_mean_lo = np.append(analy_mean_lo, analy_conf_mean[0])

analy_mean_hi = np.append(analy_mean_hi, analy_conf_mean[1])

# bootstrap confidence interval of mean

boot_means = [np.mean(np.random.choice(arr, len(arr))) for _ in range(boot_iter)]

boot_mean_lo = np.append(boot_mean_lo, np.percentile(boot_means, 2.5))

boot_mean_hi = np.append(boot_mean_hi, np.percentile(boot_means, 97.5))

# analytical confidence interval of variance

variances = np.append(variances, np.var(arr, ddof=1))

analy_conf_var = (

(len(arr) - 1) * np.var(arr, ddof=1) / stats.chi2.ppf(1 - 0.05 / 2, len(arr) - 1),

(len(arr) - 1) * np.var(arr, ddof=1) / stats.chi2.ppf(0.05 / 2, len(arr) - 1)

)

analy_var_lo = np.append(analy_var_lo, analy_conf_var[0])

analy_var_hi = np.append(analy_var_hi, analy_conf_var[1])

# bootstrap confidence interval of variance

boot_vars = [np.var(np.random.choice(arr, len(arr)), ddof=1) for _ in range(boot_iter)]

boot_var_lo = np.append(boot_var_lo, np.percentile(boot_vars, 2.5))

boot_var_hi = np.append(boot_var_hi, np.percentile(boot_vars, 97.5))

# plotting

def styling(ax, xticks, xticklables):

ax.legend(fontsize=14, loc='lower right', framealpha=1, frameon=True)

ax.set_xlabel('Sample sizes', fontsize=16)

ax.set_facecolor('#eeeeee')

ax.grid(True, linestyle='--', color='#acacac')

ax.tick_params(color='grey')

ax.set_xticks(xticks)

ax.set_xticklabels([str(label) for label in xticklables])

_ = [spine.set_edgecolor('grey') for spine in ax.spines.values()]

x = np.array([i for i in range(len(sample_sizes))])

fig, axes = plt.subplots(1, 2, figsize=(14, 5))

axes[0].errorbar(x - 0.15, means, yerr=[abs(boot_mean_lo - means), abs(boot_mean_hi - means)],

fmt='o', label='95% bootstrap C.I.', color='k', markersize=8, capsize=5, linewidth=2)

axes[0].errorbar(x + 0.15, means, yerr=np.array([abs(analy_mean_hi - means), abs(analy_mean_lo - means)]),

fmt='o', label='95% analytical C.I.', color='grey', markersize=8, capsize=5, linewidth=2)

styling(axes[0], x, sample_sizes)

axes[0].set_ylabel('Sample mean', fontsize=16)

axes[0].set_title('Confidence interval of means $\mu$', fontsize=18)

axes[0].text(0.75, 0.85, 'aegis4048.github.io', fontsize=15, ha='center', va='center',

transform=axes[0].transAxes, color='grey', alpha=0.5);

axes[1].errorbar(x - 0.15, means, yerr=[abs(boot_var_lo - variances), abs(boot_var_hi - variances)],

fmt='o', label='95% bootstrap C.I.', color='k', markersize=8, capsize=5, linewidth=2)

axes[1].errorbar(x + 0.15, means, yerr=np.array([abs(analy_var_hi - variances), abs(analy_var_lo - variances)]),

fmt='o', label='95% analytical C.I.', color='grey', markersize=8, capsize=5, linewidth=2)

styling(axes[1], x, sample_sizes)

axes[1].set_ylabel('Sample variance', fontsize=16)

axes[1].set_title('Confidence interval of variances $\sigma^2$', fontsize=18)

axes[1].text(0.75, 0.35, 'aegis4048.github.io', fontsize=15, ha='center', va='center',

transform=axes[1].transAxes, color='grey', alpha=0.5);

fig.tight_layout()

Bootstrap fails to estimate extreme quantiles

Bootstrap fails to estimate some really weird statistics that depend on very small features of the data. For example, using bootstrapping to determine anything close to extreme values (ex: min, max) of a distribution can be unreliable. There are also problems with estimating extreme quantiles, like 1% or 99%. Note that bootstrapped 95% or 99% CI are themselves at tails of a distribution, and thus could suffer from such a problem, particularly with small sample sizes. Bootstrap works better in the middle of a distribution than at the tails, which makes bootstrapping the median to be robust, whereas bootstrapping the min or max to fail.

Samples are independent and identically distributed (i.i.d.)

Another central issue with bootrapping is, "does the resampling procedure preserve the structure of the original sample?" The greatest problem with bootstrapping dependent data is to create samples that have the dependence structures that are sufficiently close to those in the original data. Because it is impossible to preserve it with the naive bootstrap, a sample needs to be i.i.d.

This assumption raises a few practical issues when dealing with time series. First, by randomly sampling without constraints, naive bootstrap destroys the time-dependence structure in time series. In time series, all data points are aligned with respect to time, but random resampling does not respect their orders. Second, if there's an upward or downward trend in the means or variances, the trend will be lost due to random resampling. Third, because time series is essentially continuous samples of size 1 for each point in time, resampling a sample is equivalent to the original samples; one learns nothing by resampling. Therefore, resampling of a time series requires new ideas, such as block bootstrapping.

There are a few variations of bootstrap that attemtp to preserve the dependency structure of samples, which I will not introduce here due to their mathematical complexities. When using tehchniques based on random sampling, ensure that the samples are i.i.d., or use techniques that preserve (reasonably) the structure of the original data.

Bootstrap iteration (Monte-Carlo method) should be sufficient to reproduce consistent C.I's.

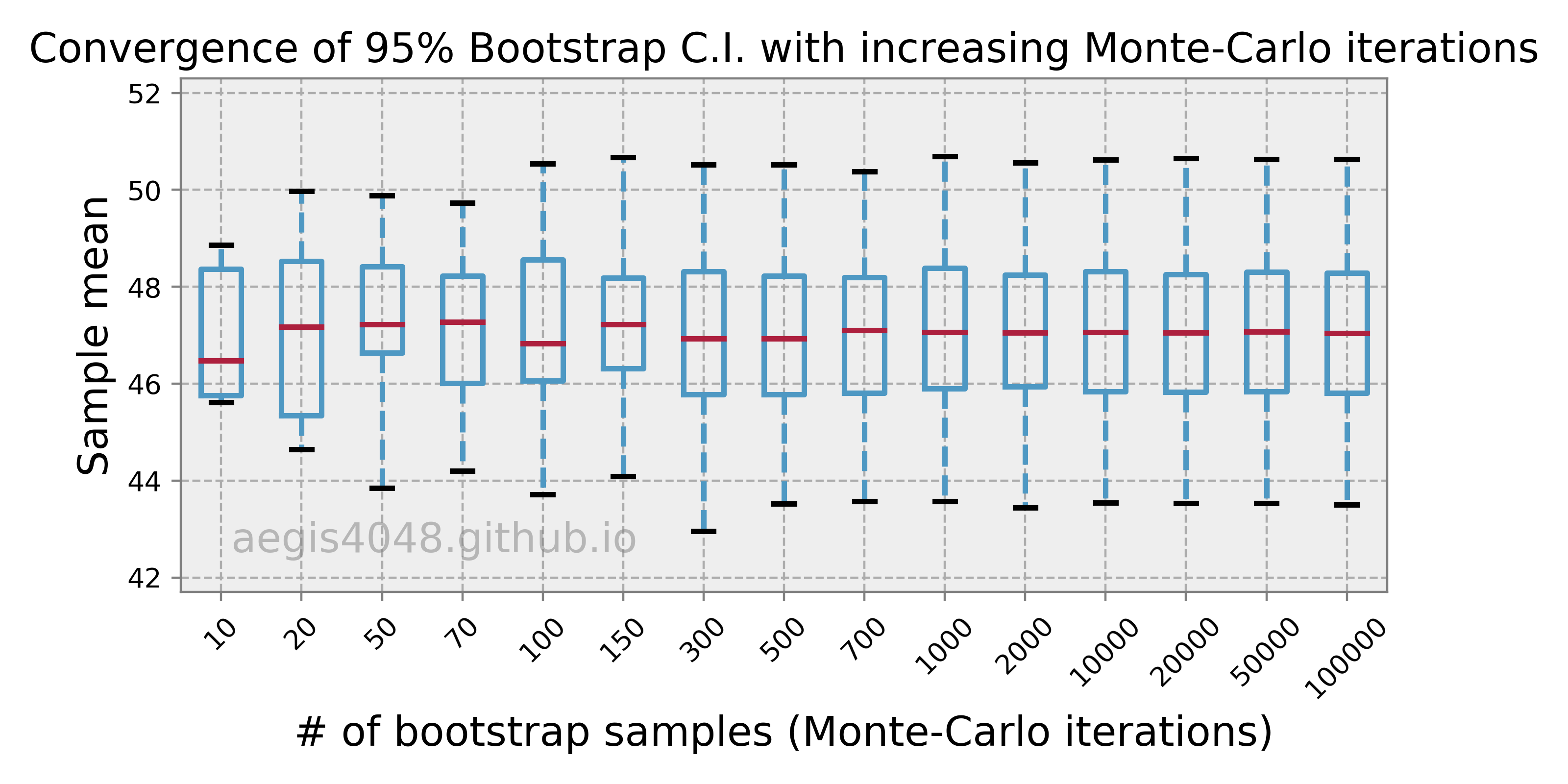

Because bootstrap relies on "random" resampling, the result of any statistical analysis performed with bootstrap can vary from time to time. The extent of variability depends on the number of bootstrap samples $r$, and $r$ should be large enough to guarantee convergence of bootstrap statistics to a stable value. Note that there's a distinction between the size of the original sample $n$ and the number of bootstrap samples $r$. We can't change $n$, but we can change $r$ because $r$ is equivalent to the number of Monte-Carlo iterations, which can be set by a statistician.

So how do we determine what value of $r$ is "large" enough to guarantee convergence of bootstrap statistics? You can do it by obtaining multiple bootstrap analysis results for increasing number of simulations $r$, and see if the result converges to certain range of values, as shown in figure (15). In the figure, it seems that $r=10,000$ is a good choice. But in practice, you want $r$ to be as large as possible, to an extent where the computational cost is not too huge. I've read a research paper where the authors used $r = 500,000$ to really ensure convergence.

Figure 14: Convergence of Bootstrap C.I.

Source Code For Figure (14)

import matplotlib.pyplot as plt

import numpy as np